Meathooks: Dennett and the Death of Meaning

by rsbakker

Aphorism of the Day: God is myopia, personality mapped across the illusion of the a priori.

.

In Darwin’s Dangerous Idea, Daniel Dennett attempts to show how Darwinism possesses the explanatory resources “to unite and explain everything in one magnificent vision.” To assist him, he introduces the metaphors of the ‘crane’ and the ‘skyhook’ as a general means of understanding the Darwinian cognitive mode and that belonging to its traditional antagonist:

Let us understand that a skyhook is a “mind-first” force or power or process, an exception to the principle that all design, and apparent design, is ultimately the result of mindless, motiveless mechanicity. A crane, in contrast, is a subprocess or a special feature of a design process that can be demonstrated to permit the local speeding up of the basic, slow process of natural selection, and can be demonstrated to be itself the predictable (or retrospectively explicable) product of the basic process. Darwin’s Dangerous Idea, 76

The important thing to note in this passage is that Dennett is actually trying to find some middle-ground, here, between what might be called the ‘top-down’ intuitions, which suggest some kind of essential break between meaning and nature, and ‘bottom-up’ intuitions, which seem to suggest there is no such thing as meaning at all. What Dennett attempts to argue is that the incommensurability of these families of intuitions is apparent only, that one only needs to see the boom, the gantry, the cab, and the tracks, to understand how skyhooks are in reality cranes, the products of Darwinian evolution through and through.

The arch-skyhook in the evolutionary story, of course, is design. What Dennett wants to argue is that the problem has nothing to do with the concept of design per se, but rather with a certain way of understanding it. Design is what Dennett calls a ‘Good Trick,’ a way of cognizing the world without delving into its intricacies, a powerful heuristic selected precisely because it is so effective. On Dennett’s account, then, design really looks like this:

And only apparently looks like this:

Design, in other words, is not the problem–design is a crane, something easily explicable in natural terms. The problem, rather, lies in our skyhook conception of design. This is a common strategy of Dennett’s. Even though he’s commonly accused of eliminativism (primarily for his rejection of ‘original intentionality’), a fair amount of his output is devoted to apologizing for the intentional status quo, and Darwin’s Dangerous Idea is filled with some of his most compelling arguments to this effect.

Now I actually think the situation is nowhere near so straightforward as Dennett seems to think. I also believe Dennett’s ‘redefinitional strategy,’ where we hang onto our ‘folk’ terms and simply redefine them in light of incoming scientific knowledge, is more than a little tendentious. But to see this, we need to understand why it is these metaphors of crane and skyhook capture as much of the issue of meaning and nature as they do. We need to take a closer look.

Darwin’s great insight, you could say, was simply to see the crane, to grasp the great, hidden mechanism that explains us all. As Dennett points out, if you find a ticking watch while walking in the woods, the most natural thing in the world is to assume that you’ve discovered an intentional artifact, a product of ‘intelligent design.’ Darwin’s world-historical insight was to see how natural processes lacking motive, intelligence, or foresight could accomplish the same thing.

But what made this insight so extraordinary? Why was the rest of the crane so difficult to see? Why, in other words, did it take a Darwin to show us something that, in hindsight at least, should have been so very obvious?

Perspective is the most obvious, most intuitive answer. We couldn’t see because we were in no position to see. We humans are ephemeral creatures, with imaginations that can be beggared by mere centuries, let alone the vast, epochal processes that created us. Given our frame of informatic reference, the universe is an engine that idles so low as to seem cold and dead–obviously so. In a sense, Darwin was asking his peers to believe, or at least consider, a rather preposterous thing: that their morphology only seemed fixed, that when viewed on the appropriate scale, it became wax, something that sloshed and spilled into environmental moulds.

A skyhook, on this interpretation, is simply what cranes look like in the fog of human ignorance, an artifact of myopia–blindness. Lacking information pertaining to our natural origins (and what is more, lacking information regarding that lack), we resorted to those intuitions that seemed most immediate, found ways, as we are prone to do, to spin virtue and flattery out of our ignorance. Waste not, want not.

All this should be clear enough, I think. As ‘brights’ we have an ingrained animus against the beliefs of our outgroup competitors. ‘Intelligent design,’ in our circles at least, is what psychologists call an ‘identity claim,’ a way to sort our fellows on perceived gradients of cognitive authority. As such, it’s very easy to redefine, as far as intentional concepts go. Contamination is contamination, terminological or no. And so we have grown used to using the intuitive, which is to say, skyhook, concept of design ‘under erasure,’ as continental philosophers might say–as a mere façon de parler.

But I fear the situation is nowhere near so easy, that when we take a close look at the ‘skyhook’ structure of ‘design,’ when we take care to elucidate its informatic structure as a kind of perspectival artifact, we have good reason to be uncomfortable–very uncomfortable. Trading in our intuitive concept of design for a scientifically informed one, as Dennett recommends, actually delivers us to a potentially catastrophic implicature, one that only seems innocuous for the very reason our ancestors thought ‘design’ so obvious and innocuous: ignorance and informatic neglect.

On Dennett’s account, design is a kind of ‘stance’–literally, a cognitive perspective–a computationally parsimonious way of making sense of things. He has no problem with relying on intentional concepts because, as we have seen, he thinks them reliable, at least enough for his purposes. For my part, I prefer to eschew ‘stances’ and the like and talk exclusively in terms of heuristics. Why? For one, heuristics are entirely compatible with the mechanistic approach of the life sciences–unlike stances. As such, they do not share the liabilities of intentional concepts, which are much more prone to be applied out of school, and so carry an increased risk of generating conceptual confusion. Moreover, by skirting intentionality, heuristic talk obviates the threat of circularity. The holy grail of cognitive science, after all, is to find some natural (which is to say, nonintentional) way to explain intentionality. But most importantly, heuristics, unlike stances, make explicit the profound role played by informatic neglect. Heuristics are heuristics (as opposed to optimization devices) by virtue of the way they systematically ignore various kinds of information. And this, as we shall see, makes all the difference in the world.

Recall the question of why we needed Darwin to show us the crane of evolution. The crane was so hard to see, I suggested, because of our limited informatic frame of reference–our myopic perspective. So then why did we assume design was the appropriate model? Why, in the absence of information pertaining to natural selection, should design become the default explanation of our biological origins as opposed to, say, ‘spontaneity’? When On the Origin of Species was published in 1859, for instance, many naturalists actually accepted some notion of purposive evolution; it was natural selection they found offensive, the mindlessness of biological origins. One can cite many contributing factors in answering this question, of course, but looming large over all of them is the fact that design is a natural heuristic, one of many specialized cognitive tools developed by our oversexed, undernourished ancestors.

By rendering the role of informatic neglect implicit, Dennett’s approach equivocates between ‘circumstantial’ and ‘structural’ ignorance, or in other words, between the mere inability to see and blindness proper. Some skyhooks we can dissolve with the accumulation of information. Others we cannot. This is why merely seeing the crane of evolution is not enough, why we must also put the skyhook of intuitive design on notice, quarantine it: we may be born in ignorance of evolution, but we die with the informatic neglect constitutive of design.

Our ignorance of evolution was never a simple matter of ignorance, it was also a matter of human nature, an entrenched mode of understanding, one incompatible with the facts of Darwinian evolution. Design, it seemed, was obviously true, either outright or upon the merest reflection. We couldn’t see the crane of evolution, not simply because we were in no position to see (given our ephemeral nature), but also because we were in position to see something else, namely, the skyhook of design. Think about the two photos I provided above, the way the latter, the skyhook, was obviously an obfuscation of the former, the crane, not merely because you had the original photo to reference, but because you could see that something had been covered over–because you had access, in other words, to information pertaining to the lack of information. The first photo of the crane strikes us as complete, as informatically sufficient. The second photo of the skyhook, however, strikes us as obviously incomplete.

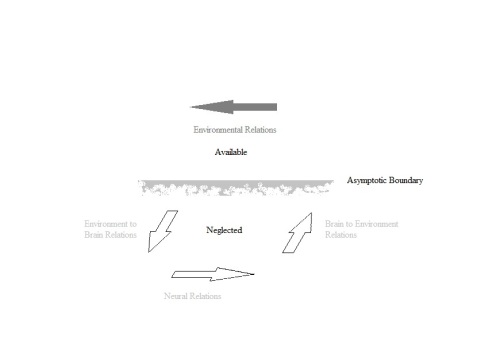

We couldn’t see the crane of evolution, in other words, not just because we were in position to see something else, the skyhook of design, but because we were in position to see something else and nothing else. The second photo, in other words, should have looked more like this:

Enter the Blind Brain Theory. BBT analyzes problems pertaining to intentionality and consciousness in terms of informatic availability and cognitive applicability, in terms of what information we can reasonably expect conscious deliberation to access, and the kinds of heuristic limitations we can reasonably expect it to face. Two of the most important concepts arising from this analysis are apparent sufficiency and asymptotic limitation. Since differentiation is always a matter of more information, informatic sufficiency is always the default. We always need to know more, you could say, to know that we know less. This is why intentionality and consciousness, on the BBT account, confront philosophy and science with so many apparent conundrums: what we think we see when we pause to reflect is limned and fissured by numerous varieties of informatic neglect, deficits we cannot intuit. Thus asymptosis and the corresponding illusion of informatic sufficiency, the default sense that we have all the information we need simply because we lack information pertaining to the limits of that information.

This is where I think all those years I spent reading continental philosophy have served me in good stead. This is also where those without any background in continental thought generally begin squinting and rolling their eyes. But the phenomenon is literally one we encounter every day–every waking moment in fact (although this would require a separate post to explain). In epistemological terms, it refers to ‘unknown-unknowns,’ or unk-unks as they are called in engineering. In fact, we encountered its cognitive dynamics just above when puzzling through the question of why natural selection, which seems so obvious to us in hindsight, could count as such a revelation prior to 1859. Natural selection, quite simply, was an unknown unknown. Lacking the least information regarding the crane, in other words, meant that design seemed the only option, the great big ‘it’s-gotta-be’ of early nineteenth century biology.

In a sense, all BBT does is import this cognitive dynamic–call it, the ‘Only-game-in-town Effect’–into human cognition and consciousness proper. In continental philosophy you find this dynamic conceptualized in a variety of ways, as ‘presence’ or ‘identity thinking,’ for example, in its positive incarnation (sufficiency), or as ‘différance’ or ‘alterity’ in its negative (neglect). But as I say, we witness it everywhere in our collective cognitive endeavours. All you need do is think of the way the accumulation of alternatives has the effect of progressively weakening novel interpretations, such as Kant’s say, in philosophy. Kant, who was by no means a stupid man, could actually believe in the power of transcendental deduction to deliver synthetic a priori truths simply because he was the first. Its interpretative nature only became evident as the variants, such as Fichte’s, began piling up. Or consider the way contextualizing claims, giving them speakers and histories and motives and so on has the strange effect of relativizing them, somehow robbing them of veridical force. Back in my teaching days, I would illustrate the power of unk-unk via a series of recontextualizations. I would give the example of a young man stabbing an old man, and ask my students if it’s a crime. “Yes,” they would cry. “What could be more obvious!” Then I would start stacking contexts, such as a surrounding mob of other men stabbing one another, then a giant arena filled with screaming spectators watching it all, and so on.

The Only-game-in-town Effect (or the Invisibility of Ignorance), according to BBT, plays an even more profound role within us than it does between us. Conscious experience and cognition as we intuit them, it argues, are profoundly structured ‘by’ unk-unk–or informatic neglect.

This is all just to say that the skyhook of design always fills the screen, so to speak, that it always strikes us as sufficient, and can only be revealed as parochial through the accumulation of recalcitrant information. And this makes plain the astonishing nature of Darwin’s achievement, just how far he had to step out of the traditional conceptual box to grasp the importance of natural selection. At the same time, it also explains why, at least for some, the crane was in the ‘air,’ so to speak, why Darwin ultimately found himself in a race with Wallace. The life sciences, by the middle of the 19th century, had accumulated enough ‘recalcitrant information’ to reveal something of the heuristic parochialism of intuitive design and its inapplicability to the life sciences as a matter of fact, as opposed to mere philosophical reflection a la, for instance, Hume.

Intuitive design is a native cognitive heuristic that generates ‘sufficient understanding’ via the systematic neglect of ‘bottom-up’ causal information. The apparent ‘sufficiency’ of this understanding, however, is simply an artifact of this self-same neglect: as is the case with other intentional concepts, it is notoriously difficult to ‘get behind’ this understanding, to explain why it should count as cognition at all. To take Dennett’s example of finding a watch in the forest: certainly understanding that a watch is an intentional artifact, the product of design, tells you something very important, something that allows you to distinguish watches from rocks, for instance. It also tells you to be wary, that other agents such as yourself are about, perhaps looking for that watch. Watch out!

But what, exactly, is it you are understanding? Design seems to possess a profound ‘resolution’ constraint: unlike mechanism, which allows explanations at varying levels of functional complexity, organelles to cells, cells to tissues, tissues to organs, organs to animal organisms, etc., design seems stuck at the level of the ‘personal,’ you might say. Thus the appropriateness of the metaphor: skyhooks leave us hanging in a way that cranes do not.

And thus the importance of cranes. Precisely because of its variable degrees of resolution, you might say, mechanistic understanding allows us to ‘get behind’ our environments, not only to understand them ‘deeper,’ but to hack and reprogram them as well. And this is the sense in which cranes trump skyhooks, why it pays to see the latter as perspectival distortions of the former. Design, as it is intuitively understood, is a skyhook, which is to say, a cognitive illusion.

And here we can clearly see how the threat of tendentiousness hangs over Dennett’s apologetic redefinitional project. The design heuristic is effective precisely because it systematically neglects causal information. It allows us to understand what systems are doing and will do without understanding how they actually work. In other words, what makes design so computationally effective across a narrow scope of applications, causal neglect, seems to be the very thing that fools us into thinking it’s a skyhook–causal neglect.

Looked at in this way, it suddenly becomes very difficult to parse what it is Dennett is recommending. Replacing the old, intuitive, skyhook design-concept with a new, counterintuitive, crane design-concept means using a heuristic whose efficiencies turn on causal neglect in a manner amenable to causal explanation. Now it seems easy, I suppose, to say he’s simply drawing a distinction between informatic neglect as a virtue and informatic neglect as a vice, but can this be so? When an evolutionary psychologist says, ‘We are designed for persistence hunting,’ are we cognizing ‘designed for’ in a causal sense? If so, then what’s the bloody point of hanging onto the concept at all? Or are we cognizing ‘designed for’ in an intentional sense? If so, then aren’t we simply wrong? Or are we, as seems far more likely the case, cognizing ‘designed for’ in an intentional sense only ‘as if’ or ‘under erasure,’ which is to say, as a mere façon de parler?

Either way, the prospects for Dennett’s apologetic project, at least in the case of design, seem to look exceedingly bleak. The fact that design cannot be the skyhook it seems to be, that it is actually a crane, does nothing to change the fact that it leverages computational efficiencies via causal neglect, which is to say, by looking at the world through skyhook glasses. The theory behind his cranes is impeccable. The very notion of crane-design as a deployable concept, however, is incoherent. And using concepts ‘under erasure,’ as one must do when using ‘design’ in evolutionary contexts, would seem to stand upon the very lip of an eliminativist abyss.

And this is simply an instance of what I’ve been ranting about all along here on Three Pound Brain, the calamitous disjunction of knowledge and experience, and the kinds of distortions it is even now imposing on culture and society. The Semantic Apocalypse.

.

But Dennett is interested in far more than simply providing a new Darwinian understanding of design, he wants to mint a new crane-coinage for all intentional concepts. So the question becomes: To what extent do the considerations above apply to intentionality as a whole? What if it were the case that all the peculiarities, the interminable debates, the inability to ‘get behind’ intentionality in any remotely convincing way–what if all this were more than simply coincidental? Insofar as all intentional concepts systematically neglect causal information, we have ample reason to worry. Like it or not, all intentional concepts are heuristic, not in any old manner, but in the very manner characteristic of design.

Brentano, not surprisingly, provides the classic account of the problem in Psychology From an Empirical Standpoint, some fifteen years after the publication of On the Origin of Species:

Every mental phenomenon includes something as object within itself, although they do not all do so in the same way. In presentation something is presented, in judgement something is affirmed or denied, in love loved, in hate hated, in desire desired and so on. This intentional in-existence is characteristic exclusively of mental phenomena. No physical phenomenon exhibits anything like it. We can, therefore, define mental phenomena by saying that they are those phenomena which contain an object intentionally within themselves. 68

No physical phenomenon exhibits intentionality, and likewise, no intentional phenomenon exhibits anything like causality, at least not obviously so. The reason for this, on the BBT account, is as clear as can be. Most are inclined to blame the computational intractability of cognizing and tracking the causal complexities of our relationships. The idea (and it is a beguiling one) is that aboutness is a kind of evolved discovery, that the exigencies of natural selection cobbled together a brain capable of exploiting a preexisting logical space–what we call the ‘a priori.’ Meaning, or intentionality more generally, on this account is literally ‘found money.’ The vexing question, as always, is one of divining how this logical level is related to the causal.

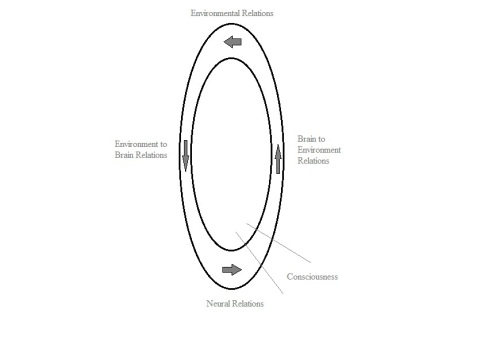

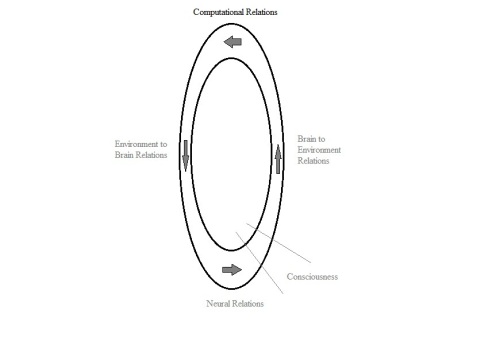

On the BBT account, the computational intractability of cognizing and tracking the causal complexities of our environmental relationships is also to blame, but aboutness, far from being found money, is rather a kind of ‘frame heuristic,’ a way for the brain to relate itself to its environments absent causal information pertaining to this relation. It presumes that consciousness is a distributed, dynamic artifact of some subsystem of the brain and that, as such, faces severe constraints on its access to information generally, and almost no access to information regarding its own neurofunctionality whatsoever:

It presumes, in other words, that the information available for deliberative or conscious cognition must be, for developmental as well as structural reasons, drastically attenuated. And it’s easy to see how this simply has to be the case, simply given the dramatic granularity of consciousness compared to the boggling complexities of our peta-flopping brains.

The real question–the million dollar question, you might say–turns on the character of this informatic attenuation. At the subpersonal level, ‘pondering the mental’ consists (we like to suppose anyway) in the recursive uptake of ‘information regarding the mental’ by ‘System 2,’ or conscious, deliberative cognition. The question immediately becomes: 1) Is this information adequate for cognition? and 2) Are the heuristic systems employed even applicable to this kind of problem, namely, the ‘problem of the mental’? Is the information (as Dennett seems to assume throughout his corpus) ‘merely compressed,’ which is to say, merely stripped to its essentials to maximize computational efficiencies? Or is it a far, far messier affair? Given that the human cognitive ‘toolkit,’ as they call it in ecological rationality circles, is heuristically adapted to troubleshoot external environments, can we assume that mental phenomena actually lie within their scope of application? Could the famed and hoary conundrums afflicting philosophy of mind and consciousness research be symptoms of heuristic overreach, the application of specialized cognitive tools to a problem set they are simply not adapted to solve?

Let’s call the issue expressed in this nest of questions the ‘Attenuation Problem.’

It’s worth noting at this juncture that although Dennett is entirely on board with the notion that ‘the information available for deliberative or conscious cognition must be drastically attenuated’ (see, for instance, “Real Patterns”), he inexplicably shies from any detailed consideration of the nature of this attenuation. Well, perhaps not so inexplicably. For Dennett, the Good Tricks are good because they are efficacious and because they are winners of the evolutionary sweepstakes. He assumes, in other words, that the Attenuation Problem is no problem at all, simply because it has been resolved in advance. Thus, his apologetic, redefinitional programme. Thus his endless attempts to disabuse his fellow travellers of the perceived need to make skyhooks real:

I know that others find this vision so shocking that they turn with renewed eagerness to the conviction that somewhere, somehow, there just has to be a blockade against Darwinism and AI. I have tried to show that Darwin’s dangerous idea carries the implication that there is no such blockade. It follows from the truth of Darwinism that you and I are Mother Nature’s artefacts, but our intentionality is none the less real for being an effect of millions of years of mindless, algorithmic R and D instead of a gift from on high. Darwin’s Dangerous Idea, 426-7

Cranes are all we have, he argues, and as it turns out, they are more than good enough.

But, as we’ve seen in the case of design, the crane version forces us to check our heuristic intuitions at the door. Given that the naturalization of design requires adducing the very causal information that intuitive design neglects to leverage heuristic efficiencies, there cannot be, in effect, any coherent, naturalized concept of design, as opposed to the employment of intuitive design ‘under erasure.’ Real or not, the skyhook comes first, leaving us to append the rest of the crane as an afterthought. Apologetic redefinition is simply not enough.

And this suggests that something might be wrong with Dennett’s arguments from efficacy and evolution for the ‘good enough’ status of derived intentionality. As it turns out, this is precisely the case. Despite their prima facie appeal, neither the apparent efficacy nor the evolutionary pedigree of our intentional concepts provides Dennett with what he needs.

To see how this could be the case, we need to reconsider the two conceptual dividends of BBT considered above, sufficiency and neglect. Since more information is required to flag the insufficiency of the information (be it ‘sensory’ or ‘cognitive’) broadcast through or integrated into consciousness, sufficiency is the perennial default. This is the experiential version of what I called the ‘Only-game-in-town Effect’ above. This means that insufficiency will generally have to be inferred against the grain of a prior sense of intuitive sufficiency. Thus, one might suppose, evolution’s continued difficulties with intuitive design, and science’s battle against anthropomorphic worldviews more generally: not only does science force us to reason around elements of our own cognitive apparatus, it forces us to overcome the intuition that these elements are good enough to tell us what’s what on their own.

Dennett, in this instance at least, is arguing with the intuitive grain!

Intentionality, once again, systematically neglects causal information. As Chalmers puts it, echoing Leibniz and his problem of the Mill:

The basic problem has already been mentioned. First: Physical descriptions of the world characterize the world in terms of structure and dynamics. Second: From truths about structure and dynamics, one can deduce only further truths about structure and dynamics. And third: truths about consciousness are not truths about structure and dynamics. “Consciousness and Its Place in Nature”

Informatic neglect simply means that conscious experience tells us nothing about the neurofunctional details of conscious experience. Rather, we seem to find ourselves stranded with an eerily empty version of what the life sciences tell us we in fact are, the asymptotic (finite but unbounded) clearing called ‘consciousness’ or ‘mind’ containing, as Brentano puts it, ‘objects within itself.’ What is a mere fractional slice of the neuro-environmental circuit sketched above, literally fills the screen of conscious experience, as it were, appearing something like this:

Which is to say, something like a first-person perspective, where environmental relations appear within a ‘transparent frame’ of experience. Thus all the blank white space around the arrow: I wanted to convey the strange sense in which you are the ‘occluded frame,’ here, a background where the brain drops out, not just partially, not even entirely, but utterly. Floridi refers to this as the ‘one-dimensionality of experience,’ the way “experience is experience, only experience, and nothing but experience” (The Philosophy of Information, 296). Experience utterly fills the screen, relegating the mechanisms that make it possible to oblivion. As I’ve quipped many times: Consciousness is a fragment that constitutively confuses itself for a whole, a cog systematically deluded into thinking it’s the entire machine. Sufficiency and neglect, taken together, mean we really have no way short of a mature neuroscience of determining the character of the informatic attenuation (how compressed, depleted, fragmentary, distorted, etc.) of intentional phenomena.

So consider the evolutionary argument, the contention that evolution assures us that intentional attenuations are generally happy adaptations: Why would they be selected otherwise?

To this, we need only reply, Sure, but adapted for what? Say subreption was the best way for evolution to proceed: We have sex because we lust, not because we want to replicate our genetic information, generally speaking. We pair-bond because we love, not because we want to successfully raise offspring to the age of sexual maturation, generally speaking. When it comes to evolution, we find more than a few ‘ulterior motives.’ One need only consider the kinds of evolutionary debates you find in cognitive psychology, for instance, to realize that our intuitive sense of our myriad capacities need not line up with their adaptive functions in any way at all, let alone those we might consider ‘happy.’

Or say evolution was only concerned with providing what might be called ‘exigency information’ for deliberative cognition, the barest details required for a limited subset of cognitive activities. One could cobble together a kind of neuro-Wittgensteinian argument, suggest that we do what we do all well and fine, but that as soon as we pause to theorize what we do, we find ourselves limited to mere informatic rumour and innuendo that, thanks to sufficiency, we promptly confuse for apodictic knowledge. It literally could be the case that what we call philosophy amounts to little more than submitting the same ‘mangled’ information to various deliberative systems again and again and again, hoping against hope for a different result. In fact, you could argue that this is precisely what we should expect to be the case, given that we almost certainly didn’t evolve to ‘philosophize.’

In other words, how does Dennett know the ‘intentionality’ he and others are ‘making explicit’ accurately describes the mechanisms, the Good Tricks, that evolution actually selected? He doesn’t. He can’t.

But if the evolutionary argument holds no water, what about Dennett’s argument regarding the out-and-out efficacy of intentional concepts? Unlike the evolutionary complaint, this argument is, I think, genuinely powerful. After all, we seem to use intentional concepts to understand, predict, and manipulate each other all the time. And perhaps even more impressively, we use them (albeit in stripped down form) in formal semantics and all its astounding applications. Fodor, for instance, famously argues that the use of representations in computation provides an all-important ‘compatibility proof.’ Formalization links semantics to syntax, and computation links syntax to causation. It’s hard to imagine a better demonstration of the way skyhooks could be linked to cranes.

Except that, like fitting the belly of Africa into the gut of the Caribbean, it never quite seems to work when you actually try. Thus Searle’s famous Chinese Room Argument and Harnad’s generalization of it into the Symbol Grounding Problem. But the intuition persists that it has to work somehow: After all, what else could account for all that efficacy?

Plenty, it turns out. Intentional concepts, no matter how attenuated, will be efficacious to the degree to which the brain is efficacious, simply by virtue of being systematically related to the activity of the brain. The upshot of sufficiency and neglect, recall, is that we are prone to confuse what little information we have available for most all the information available. The greater neuro-environmental circuit revealed by third-person science simply does not exist for the first-person, not even as an absence. This generates the problem of metonymicry, or the tendency for consciousness to take credit for the whole cognitive show regardless of what actually happens neurocomputationally back stage. No matter how mangled our metacognitive understanding, how insufficient the information broadcast or integrated, in the absence of contradicting information, it will count as our intuitive baseline for what works. It will seem to be the very rule.

And this, my view predicts, is what science will eventually make of the ‘a priori.’ It will show it to be of a piece with the soul, which is to say, more superstition, a cognitive illusion generated by sufficiency and informatic neglect. As a neural subsystem, the conscious brain has more than just the environment from which to learn; it also has the brain itself. Perhaps logic and mathematics as we intuitively conceive them are best thought of, from the life sciences perspective at least (that is, the perspective you hope will keep you alive every time you see your doctor), as kinds of depleted, truncated, informatic shadows cast by brains performatively exploring the most basic natural permutations of information processing, the combinatorial ensemble of nature’s most fundamental, hyper-applicable, interaction patterns.

On this view, ‘computer programming,’ for instance, looks something like:

where essentially, you have two machines conjoined, two ‘implementations’ with semantics arising as an artifact of the varieties of informatic neglect characterizing the position of the conscious subsystem on this circuit. On this account, our brains ‘program’ the computer, and our conscious subsystems, though they do participate, do so under a number of onerous informatic constraints. As a result, we program blind to all aetiologies save the ‘lateral,’ which is to say, those functionally independent mechanisms belonging to the computer and to our immediate environment more generally. In place of any thoroughgoing access to these ‘medial’ (functionally dependent) causal relations, conscious cognition is forced to rely on what little information it can glean, which is to say, the cartoon skyhooks we call semantics. Since this information is systematically related to what the brain is actually doing, and since informatic neglect renders it apparently sufficient, conscious cognition decides it’s the outboard engine driving the whole bloody boat. Neural interaction patterns author inference schemes that, thanks to sufficiency and neglect, conscious cognition deems the efficacious author of computer interaction patterns.

Semantics, in other words, can be explained away.

The very real problem of metonymicry allows us to see how Dennett’s famous ‘two black boxes’ thought-experiment (Darwin’s Dangerous Idea, 412-27), far from dramatically demonstrating the efficacy of intentionality, is simply an extended exercise in question-begging. Dennett tells the story of a group of researchers stranded with two black boxes, each containing a supercomputer containing a database of ‘true facts’ about the world, only in different programming languages. One box has two buttons labelled alpha and beta, while the second box has three lights coloured yellow, red, and green. A single wire connects them. Unbeknownst to the researchers, the button box simply transmits a true statement when the alpha button is pushed, which the bulb box acknowledges by lighting the red bulb for agreement, and a false statement when the beta button is pushed, which the bulb box acknowledges by lighting the green bulb for disagreement. The yellow bulb illuminates only when the bulb box can make no sense of the transmission, which is always the case when the researchers disconnect the boxes and, being entirely ignorant of any of these details, substitute signals of their own.

What Dennett wants to show is how these box-to-box interactions would be impossible to decipher short of taking the intentional stance, in which case, as he points out, the communications become easy enough for a child to comprehend. But all he’s really saying is that the coded transmissions between our brains only make sense from the standpoint of our environmentally informed brains–that the communications between them are adapted to their idiosyncrasies as environmentally embedded, supercomplicated systems. He thinks he’s arguing the ineliminability of intentionality as we intuitively conceive it, as if it were the one wheel required to make the entire mechanism turn. But again, the spectre of metonymicry, the fact that, no matter where our intentional intuitions fit on the neurofunctional food chain, they will strike us as central and efficacious even when they are not, means that all this thought experiment shows–all that it can show, in fact–is that our brains communicate in idiosyncratic codes that conscious cognition seems to access via intentional intuitions. To assume that our assumptions regarding the ‘intentional’ capture that code without gross, even debilitating distortions, simply begs the question.

The question we want answered is how intentionality as we understand it is related to the efficacy of our brains. We want to know how conscious experience and cognition fit into this far more sophisticated mechanistic picture. Another way of putting this, since it amounts to the same thing, is that we want to know whether it makes any sense doing philosophy as we have traditionally conceived it. How far can we trust our native intuitions regarding intentionality? The irony, of course, is that Dennett himself argues no, at least to the extent that skyhooks are both intuitive and illusory. Efficacy, understood via design, is ‘top-down,’ the artifact of agency, which is to say, another skyhook. The whole point of introducing the metaphor of cranes was to find some way of capturing our ‘skyhookish’ intuitions in a manner amenable to Darwinian evolution. And, as we saw in the case of design, above, this inexorably means using the concept ‘under erasure.’

The way cognitive science may force us to use all intentional concepts.

.

Consciousness, whatever it turns out to be, is informatically localized. We are just beginning the hard work of inventorying all the information, constitutive or otherwise, that slips through its meagre nets. Because it is localized, it lacks access to vast amounts of information regarding its locality. This means that it is locally conditioned in such a way that it assumes itself locally unconditioned–to be a skyhook as opposed to a crane.

A skyhook, of course, that happens to look something like this

which is to say, what you are undergoing this very moment, reading these very words. On the BBT account, the shape of the first-person is cut from the third-person with the scissors of neglect. The best way to understand consciousness as we humans seem to generally conceive it, to unravel the knots of perplexity that seem to belong to it, is to conceive it in privative terms, as the result of numerous informatic subtractions.* Since those subtractions are a matter of neglect from the standpoint of conscious experience and cognition, they in no way exist for conscious experience and cognition, which means their character utterly escapes our ability to cognize, short of the information accumulating in the cognitive sciences. Experience provides us with innumerable assumptions regarding what we are and what we do, intuitions stamped in various cultural moulds, all conforming to the metaphorics of the skyhook. Dennett’s cranes are simply attempts to intellectually plug these skyhooks into the meat that makes them possible, allowing him to thus argue that intentionality is real enough.

Metonymicry shows that the crane metaphor not only fails to do the conceptual heavy lifting that Dennett’s apologetic redefinitional strategy demands, it also fails to capture the ‘position,’ if you will, of our intentional skyhooks relative to the neglected causality that makes them possible. Cranes may be ‘grounded,’ but they still have hooks: this is why the metaphor is so suggestive. Mundane cranes may be, but they can still do the work that skyhooks accomplish via magic. The presumption that intentional concepts do the work we think that they do is, you could say, built right into the metaphoric frame of Dennett’s argument. But the problem is that skyhooks are not ‘cranes,’ rather they are cogs, mechanistic moments in a larger mechanism, rising from neglected processes to discharge neglected functions. They hang in the meat, and the question of where they hang, and the degree to which their functional position matches or approximates their intuitive one remains a profoundly open and entirely empirical question.

Thus, the pessimistic, quasi-eliminativist thrust of BBT: once metonymicry decouples intentionality from neural efficacy, it seems clear there are far more ways for our metacognitive intuitions to be deceived than otherwise.

Either way, the upshot is that efficacy, like evolution, guarantees nothing when it comes to intentionality. It really could be the case that we are simply ‘pre-Darwinian’ with reference to intentionality in a manner resembling the various commitments to design held back in Darwin’s day. Representation could very well suffer the same fate vis a vis the life sciences–it literally could become a concept that we can only use ‘under erasure’ when speaking of human cognition.

Science overcoming the black box of the brain could be likened to a gang of starving thieves breaking into a treasure room they had obsessively pondered for the entirety of their unsavoury careers. They range about, kicking over cases filled with paper, fretting over the fact that they can’t find any gold, anything possessing intrinsic value. Dennett is the one who examines the paper, and holds it up declaring that it’s cash and so capable of providing all the wealth anyone could ever need.

I’m the one-eyed syphilitic, the runt of the evil litter, who points out Jefferson Davis staring up from each and every $50 bill.

.

Notes

* It’s worth pausing to consider the way BBT ‘pictures’ consciousness. First, BBT is agnostic on the issue of how the brain generates consciousness; it is concerned, rather, with the way consciousness appears. Taking a deflationary, ‘working conception’ of nonsemantic information, and assuming three things–that consciousness involves integration of differentiated elements, that it has no way of cognizing information related to its own neurofunctionality, and that it is a subsystematic artifact of the brain–it sees the first-person and all its perplexities as expressions of informatic neglect. Consider the asymptotic margins of visual attention–the way the limits of what you are seeing this very moment cannot themselves be seen. BBT argues that similar asymptotic margins, or ‘information horizons,’ characterize all the modalities of conscious experience–as they must, insofar as the information available to each is finite. The radical step in the picture is to see how this trivial fact can explain the apparent structure of the first-person as an asymptotic partitioning of a larger informatic environment. So it suggests that a first-person structural feature as significant and as perplexing as the Now, for instance, can be viewed as a kind of temporal analogue of our visual margin, always apparently the ‘same’ because timing can no more time itself than seeing can see itself, and always different because other cognitive systems (as in the case of vision again) can frame it as another moment within a larger (in this case temporal) environment. Most of the problems pertaining to consciousness, the paradoxicality, the incommensurability, the inexplicability, can be explained if we simply adopt a subsystematic perspective, and start asking what information we could realistically expect to be available for uptake for what kinds of cognitive systems.
Thus the radical empirical stakes of BBT: the ‘consciousness’ that remains seems far, far easier to explain than the conundrum-riddled one we think we see.

After reading through the whole of the post all I can say is that I need to read more.

great but i want unholy consult!!

“Dennett, in this instance at least, is arguing with the intuitive grain!”

I do not think that Dennett assumes that intuition is capable of going beyond its inherent boundaries. He uses what has been discovered by neuroscience as a foundation for his explanations. In addition he takes as a given that natural selection selects for properties and mechanisms that are efficacious to survival. Therefore, intuitive attenuations are seen to be efficacious in terms of survival even if, or more precisely because, they do not include information pertaining to their sources in neural activity.

Yes. I say this explicitly earlier in the piece.

Then I am missing something (again). Do you disagree with the position that intuitive attenuations are efficacious to survival?

Terry, is that question one that lends credibility to how the intuition feels, when experienced first hand?

It sort of blends how an intuition feels to experience, with how mechanically it might pertain to survival. They are not necessarily the same thing.

Callan, no I wasn’t talking about the experience of an intuitive attenuation. Dennett says that brain functioning that results in intuitive attenuations is of survival advantage. Otherwise the brain would not have evolved in such a way as to function in this way.

I don’t understand then – if these intuitive attenuations, for the time being, do not get the specimen killed, then they are efficacious to survival. For now.

I might be reading the question wrong, but is it asking if these intuitive attenuations have just won a position in survival and get to keep it forever?

Strictly speaking intuitive attenuations are not efficacious to survival. They just have been so far (and that’s ignoring how much we seem to kill each other and just focusing on how we’re a plague species right now rather than endangered).

Technically it’d be correct to disagree with the idea that intuitive attenuations are efficacious to survival.

I think we may have different understandings of what “intentional attenuations” are. I could be wrong but I’m interpreting them as the contents of consciousness (thoughts, images, etc.).

That’s what I’m referring to as well, Terry 🙂

Here is why I say intentional attenuations are efficacious to survival.

What matters to brains is the quality of experiences that they have (because they believe themselves to be experiencing intentional agents). That all of its experiencing is actually in aid of the survival of the species is irrelevant to an experiencing brain. Such knowledge has no survival value and has therefore been ‘left out’ of conscious experiencing by natural selection. Experience doesn’t just neglect its origins it is incapable of knowing them.

In fact, if human brains were to experience their own workings (be directly cognizant of their own neural activities) they would experience nothing but meaninglessness.

Experiences possess meaning only by virtue of consisting of seemingly independent phenomena that (because they seem independent) are capable of being in relationships with each other. Meaning requires relationships. In order for meaning to exist it is required that experiences not include direct experiencing of the neural activity giving rise to them. The experience of being an independent entity in a world of independent but inter-related entities exists (has been selected for) because it is tremendously advantageous in terms of the survival of our species. Meaning exists because it allows brains to care about their own survival. Brains experience the pursuit of various aspects of the survival drive as the pursuit of happiness. In pursuing happiness brains do the work of maintaining the survival of the species. It’s a win-win scenario, individual brains experience meaning through the pursuit of happiness and the human species thrives as a consequence.

Meaning matters in the most fundamental way (it serves survival). Because of this evolution has seen to it that meaning is unavoidable. You can explain it but you can’t explain it away (get rid of it). The Semantic Apocalypse is a non-starter.

But you speak as if you’ve won the survival race permanently. As if it’s not just the current state of things, with things being able to change.

And if circumstances change that your meaning is actually a liability, what then?

You’re according meaning some special status in evolution, when tomorrow it may be just old news, a forgotten fashion, an old fad. Fate is a whore and evolution a fickle bitch.

Yes, it got us this far (let’s ignore the wars/murder and rape for the moment) and I think we should look at our sense of meaning (because this meaning we refer to is another intentional attenuation) and see it as having a strong pedigree, a strong survival history. Unless it’s been detrimental the whole time – but then we’d have gone extinct you’d think. Though scientists have suggested we were down to only 2K individuals at one point in the ancient past. Anyway, I throw around qualifications – we can see it has a strong past. But that doesn’t mean meaning has won the evolutionary lottery.

Technically it’d be correct to disagree with the idea that intuitive attenuations are efficacious to survival.

As it implies they always will be. Which is not necessarily true.

Thus enabling the bite of semantic apocalypse.

Not that we haven’t seemingly butchered each other (by simply declaring others and not ‘each other’) in the past en masse and we seem to do that a bit less these days. Here’s to that reducing trend remaining a trend!

“Technically it’d be correct to disagree with the idea that intuitive attenuations are efficacious to survival. As it implies they always will be. Which is not necessarily true.”

Well, of course I was not saying such would be the case for all eternity, just that as things stand now meaning is hardwired into the structure of our brains. For this hard wiring to not be relevant to survival would take a monumental environmental shift. Barring an environmental ‘apocalypse’ such a change would take millions of years and brains would either adapt or we would become extinct. I don’t think this is what Scott means by the Semantic Apocalypse. It seems to me that he is referring to the SA as a consequence of accepting our situation as described by BBT. It’s this understanding of the SA that I am saying is a non-starter.

a monumental environmental shift.

What environment? Do you hunt and gather, or grow your own food on a hill somewhere?

You don’t live in the environment – the natural environment. You live in a man made infrastructure. That can change pretty much on a dime. And you can’t escape that structure, because it takes about four months to produce a food crop outside of that infrastructure (and that’s if you get lucky).

At least you acknowledge the capacity for an environmental change (if only looking at the natural environment) and the potential for extinction because of it. We simply differ on the time scales involved.

Also I might pay if it’s just common folks consequences of accepting (assuming it’s all that possible) BBT, it might just be a non starter*.

* Though the start of a lot of strange fiction! That I won’t accept any argument on! 😉

Can you describe an environment where meaning would not have survival value? As I explained in an earlier post meaning is a consequence of intuitive attenuations.

“Also I might pay if it’s just common folks consequences of accepting (assuming it’s all that possible) BBT, it might just be a non starter*”

I’m not sure what you mean here.

Probably an environment that’s beyond good and evil. I’d say Kellhus was playing outside the scope of meaning – how far it extends. At least human meaning. If meaning seems endless, without border – well, it looks that way from within, but I’m not sure. Even before he went mad, I doubt I could keep up emotionally/meaningfully with Kellhus. Moreso after the madness. And he was just one.

I’m not sure what you mean here.

Well, I look at the way McDonald’s advertises at children and I think various corporations would, particularly in an environment where competitors are doing it, use the knowledge to manipulate. Hey, it seems a plausible theory of Scott’s – it’d be great if it turned out to be wrong (though a lot of unnecessary stress, I’ll grant). But if I ignore the corporations and just look at more regular folk, I think I might pay your point to some degree.

I can go to the store and buy a head of lettuce, tomatoes, cucumber, carrots etc. for about $12 and eat salad for a week, but it’s more convenient to spend $4 on “salad in a bag”; the convenience of salad for one day serves me and saves the work of preparing salad for an entire week, which I may not want after all or which does not serve my immediate needs.

I look out the backyard window and see the tree in my yard and recall it’s the same tree I see every day I look out; however, nature in all of its complex fractal geometry does not allow me to remember each individual leaf on that tree unless one leaf had turned brown while the rest remained green. I have no trouble seeing the back of the neighbor’s house and the rear window composed of four smaller rectangular casement windows and remembering it as the same house I’ve seen for 26 years. We may live in a conscious fractal forest but our world is a reduced system of the temple of reason built out of straight line columns, solid foundations and supported roofs. We have decomposed the world which is created on the natural system of gravity into our own Parthenon world of buildings and reason, or we have decomposed natural intentionality into systems of thought. Heck, look at what we did with our natural sex drive and the world of morality. Great grandma was betrothed in marriage at fourteen but when sixteen year old teens are caught with the boyfriend in the back of the car they are branded “sluts”.

So what would the skyhook be without the crane? And of course no such things exist….

Does that just skim the cream off the top – ignoring the wars for oil, for example, to ensure that food is shipped to a convenient location for you? And what is the cognition behind those wars?

When you get a ground up infrastructure that keeps you alive yet doesn’t rely on wars and butchery to sustain it, then you’ve more room to say ‘so what if I don’t notice every leaf on the tree?’. I’m reminded of the collateral violence video, where a telephoto lens is taken to be an RPG.

Interesting stream. If you follow the Gen. Petraeus scandal, with his Harvard trained mistress and Tampa socialites, the military is a top down society of wealthy supporters and Generals, Admirals etc. that wine and dine while the troops move around in modified commercial Humvees with no special RPG repelling armament because the DOD contracting office already overpaid for the camouflage paint job.

Yeah, but how much does your own food supply rely on their deployment?

It’s easy enough to say we get on well enough not seeing all the leaves. And this is one of the leaves.

To a certain extent I guess you could say that the original Paley watch design heuristic respects the causal chain better than the “as if” conceptualization that scientists routinely employ. “Studies show” that scientists usually think teleologically. The problem is that religious teleological thinkers “ground” the causal chain in God, who serves as a sort of philosophical singularity. There is no need to reach beyond the God event horizon since He is incomprehensible anyway. For them, design is more than a cognitive heuristic. It becomes a real explanation; I guess you could even call it scientific (with the rather massive assumption at its center). Scientists do think and discourse intentionally and teleologically and anthropomorphically; the giraffe neck is long “in order for it to” reach leaves; termites “have considered” air circulation in their mounds, and so on. It’s of such second nature that I doubt many of them consider its inaccuracy (well, yes, probably anthropomorphism), and I’m actually not so sure some of it is all that inaccurate. The giraffe neck really is long “in order for it to” reach leaves. It’s just that behind that intuition, there lies the mechanism of selection and adaptation, usefully abstracted as anatomical design purpose.

“in order to” and “designed to” make me think of a god shackled to Xerius’ throne, its eyes blinded, its loins neutered.

“The problem is that religious teleological thinkers “ground” the causal chain in God, who serves as a sort of philosophical singularity. There is no need to reach beyond the God event horizon since He is incomprehensible anyway.”

BS. You can get there if your sole experience with Christianity is TV evangelists, but they are hardly the rank and file of Christianity. Those are the money grubbers abusing Christianity to make themselves rich. Common church preachers (the good ones anyway) teach from their own experience, like any garden variety self-help guru that tries to get you out of self-esteem and other issues. The Bible is a reference, not a Rulebook. You’re talking as if every theologian believes in God as Magician, rather than Creator. The Christian perspective on science and what it has provided is a broad spectrum of beliefs. Sure, the faith-healing quacks make the news, but they are often cultish and rare. Your typical Christian goes to the doctor and gets meds. It’s interesting that it’s the non-Christian atheists that more often get trapped in the Alternative Medicine trap.

Conservative Christianity spends as much time puzzling and thinking over the Bible in Bible Study as you do reading Philosophy (maybe more at once or twice per week). They THINK about it, trying to figure out what is Good and what God wants them to do. The Bible, itself, is a book subject to considerable philosophical analysis. Just take a Look at Acts and notice that the concept of “Collective” is 2000 years old.

Christianity doesn’t worry much about how we think, true, but how important is it to the daily lives of regular folks, anyway?

Try thinking about things without the bible, without god.

I’m sure you’re physically capable of it. The other question might be whether it’s heretical to do so. A sin.

You can think Cornucopia is referring to TV evangelists as much as you want, but he’s talking about the utter resistance to thinking without bible, thinking without god. He is talking about you. But you think he could only be talking about someone unreasonable, like the evangelists. What’s more reasonable than thinking about everything in terms of the bible, twice per week?

So you appear to yourself quite open minded on the matter – yet thinking about things without bible, without god is a no-go. If we ignore the idea it’s bad in some way, then some sort of barrier has been projected onto your thinking by something else in your mind, unseen. But you treat the barrier as no more than what is part of reasonable thinking, you treat it no differently than the streets you walk down – the street goes that way, what’s more reasonable to do than to go that way? And so do I, with various unknown barriers to my own reasoning.

I’m glad your writing makes my head explode only in the facon de parler sense.

It’s ironic, Scott. Let’s say you do figure out how we think. What is the most obvious result of that?

Anything we have figured out we have modelled in a computer. The first result of uncovering the secrets of consciousness is Artificial Intelligence: once we know how consciousness exists, we should be able to program it. It’s just a matter of turning the method into a mathematical model. I noticed you have a concern about transhumanity. Are you not just as worried about Frankenstein’s Computer as his monster? Are you not concerned that you are walking Frankenstein’s path?

[…] above all the links that tie it to the ground, or reality, are unperceived. Medial neglect. A skyhook. We don’t have the tools to track those links, and so we end up with a picture that […]