The Something About Mary

by rsbakker

.

Introduction

“A heuristic,” Peter Todd and Gerd Gigerenzer write, “is a strategy that ignores available information” (Ecological Rationality, 7). Rather than assembling and assessing all possible information, it uses only the information needed to solve those problems it is adapted to solve. Heuristics, in other words, are matched to specific problems. The idea is that humans and animals alike possess (to crib Wray Herbert’s term) ‘heuristic brains,’ that the combination of environmental complexity and the steep metabolic cost of neural information processing forced the development of perceptual and cognitive ‘shortcuts’ at every level of brain function. Far from monolithic and universal, cognition must be seen as an ‘adaptive toolbox,’ a collection of special purpose tools geared to specific environmental challenges. Use the right heuristic in the right circumstance, and problems not only get solved more quickly, they regularly get solved more accurately as well. Use the wrong heuristic in the wrong circumstance, however, and things almost always go awry.
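To make the definition concrete, here is a minimal sketch of one heuristic studied by Gigerenzer and colleagues, ‘take-the-best,’ which compares two options cue by cue and stops at the first cue that discriminates, ignoring every cue after it. The cue profiles are hypothetical, the cues are assumed to be already ordered from most to least valid, and a ‘tallying’ strategy that counts every cue is included only for contrast.

```python
from typing import Sequence


def take_the_best(a: Sequence[int], b: Sequence[int]) -> str:
    """Choose between options a and b using binary cues (1 = present, 0 = absent).

    Cues are assumed to be ordered from most to least valid. The first cue
    that discriminates decides; all remaining cues are ignored.
    """
    for cue_a, cue_b in zip(a, b):
        if cue_a != cue_b:
            return "a" if cue_a > cue_b else "b"
    return "guess"  # no cue discriminates between the options


def tally(a: Sequence[int], b: Sequence[int]) -> str:
    """For contrast: weigh every cue equally and count them all."""
    score_a, score_b = sum(a), sum(b)
    if score_a == score_b:
        return "guess"
    return "a" if score_a > score_b else "b"


# Hypothetical cue profiles for two options.
option_a = [1, 0, 1, 1]
option_b = [1, 1, 0, 0]

print(take_the_best(option_a, option_b))  # prints b: decided by the second cue
print(tally(option_a, option_b))          # prints a: three cues versus two
```

The two strategies disagree here precisely because take-the-best discards the last two cues. Which one does better depends on how cues are structured in a given environment, which is the sense in which a heuristic is matched to its problems.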

What I want to do is to propose a heterodox reinterpretation of Frank Jackson’s famous Mary the neuroscientist thought-experiment in light of the heuristic brain. What makes this notorious philosophical puzzle so puzzling, I want to argue, is not so different from what makes illusions such as the Muller-Lyer effect so puzzling. The Mary thought-experiment tricks us into thinking two different kinds of information regarding red are ontologically incompatible for the same reason the Muller-Lyer effect tricks the brain into seeing lines of identical length as different: both involve heuristics that, although tremendously useful in a great variety of environments, generate puzzles when used in unusual problem-solving contexts. In other words, I want to argue that the Knowledge Argument is an artifact of what Herbert A. Simon called ‘bounded rationality,’ or a peculiar version of it, at least. Given what we know about the human brain, we can make a number of assumptions regarding the kinds of information it can and cannot access. The puzzle generated by the Mary thought experiment, I hope to show, is precisely the kind of puzzle we should expect, given the limited information (relative to cognitive resources) available to the human brain.

.

1) Two Puzzles

Just about anyone who has taken a freshman psychology class has seen the following:

Discovered by Franz Muller-Lyer in 1889, the illusion reproduced above has long been a staple of perceptual psychology. Even though both lines are identical in length, for most Westerners the top one, situated between the inward pointing arrows (or ‘fins’), appears obviously longer than the bottom line, situated between the outward pointing arrows. Since it is one of the most studied visual illusions in psychology, numerous explanations for it have been proposed. The most cited is the idea that some kind of ‘depth heuristic’ is involved (Thiery 1896, Segall et al. 1963, Gregory 1968, Howe and Purves 2004). The argument is that the visual systems of those raised in ‘carpentered environments’ develop the ability to ‘leap to conclusions’ regarding depth. The (b) line appears shorter than the (a) line because the different fins trick our brain into assuming the (b) line is nearer than the (a) line: since both lines cast retinal images of the same size, the one taken to be nearer is seen as the shorter. Given that visual perception is a probabilistic process adapted to the statistical relationships obtaining between ambiguous retinal images and real world sources–which is to say, a matter of educated guesswork–the atypical presentation of visual cues normally associated with these guesses triggers misapplications, leading to the effect you just witnessed above.

Now what on earth could this possibly have to do with Mary and the Knowledge Argument? Jackson (1982) describes the thought experiment thus:

Mary is a brilliant scientist who is, for whatever reason, forced to investigate the world from a black and white room via a black and white television monitor. She specializes in the neurophysiology of vision and acquires, let us suppose, all the physical information there is to obtain about what goes on when we see ripe tomatoes, or the sky, and use terms like ‘red’, ‘blue’, and so on.… What will happen when Mary is released from her black and white room or is given a color television monitor? Will she learn anything or not? It seems just obvious that she will learn something about the world and our visual experience of it. But then it is inescapable that her previous knowledge was incomplete. But she had all the physical information. Ergo there is more to have than that, and Physicalism is false.

The argument as expressed here is both elegant and straightforward. Mary has all the physical information about colour vision prior to experiencing colour. Experiencing colour, however, provides Mary with additional information about colour vision. Therefore, not all information is physical information.

This thought experiment has been attacked and defended from pretty much every angle imaginable. At this point, however, I’m not so much interested in attacking or defending Mary as I am in considering what she might say about human cognition more generally. For me, the puzzle of the Knowledge Argument is far more interesting as a diagnostic clue. As a happy consequence, I can simply sidestep the literature, noting that no clear consensus has emerged, despite decades of ingenious bickering back and forth. In fact, the Knowledge Argument has so effectively divided philosophers of mind that David Chalmers uses it to sort various positions in his now canonical bestiary of the field, “Consciousness and Its Place in Nature.”

The apparent insolubility of this puzzle, in other words, counts as evidence for my position. The Knowledge Argument is puzzling insofar as it consistently divides the opinions of educated and otherwise rational individuals. The remainder of my argument, however, consists of a number of empirical claims regarding the nature and limits of human cognition. Not the least of these is the notion that human cognition, as it pertains to the Knowledge Argument, is heuristic, where ‘heuristic’ is defined (in line with Todd and Gigerenzer above) as a mechanistic problem-solving system that, because it is adapted to the specific information structure of various environments, can ignore various other kinds of information.

.

2) Defining Heuristics

Sheldon Chow (2011) convincingly argues that ‘heuristic’ as generally employed in psychology and cognitive science is conceptually vague, empirically trivial, or both. The problem, quite simply, is that the ‘guaranteed correct outcome’ or ‘optimization’ procedures that heuristics are typically (and normatively) defined against apply to only a select few real world problems. As Chow writes, “Since every problem-solving or inferential procedure is heuristic in real-world contexts, there seems to be no point at all in invoking the term ‘heuristic’ to refer to these procedures, for any and all of them just are, simply and plainly, cognitive procedures applied in the real world” (47). Chow subsequently sets out to redefine heuristics in a more conceptually precise and empirically useful manner, ruling out mere instances of stimulus-response, and distinguishing between what he terms computational, perceptual, and cognitive heuristics. Taking the latter as his focus, he then distinguishes between methodological (or ‘logic of discovery’) concerns and inferential heuristics proper, which he defines as any problem-solving procedure that satisfices (as opposed to optimizes) and economizes by exploiting relevant contextual features.

There is little doubt that Chow’s treatment of heuristics is long overdue. There is, however, one sense in which his charge of triviality is misplaced, particularly with respect to the work being done in ecological rationality. As obvious as it may seem to those working in cognitive science, the claim that cognition, both learned and innate, is ‘heuristic all the way down’ possesses a number of counterintuitive implications, and to this extent at least, it is far from trivial. For one, it flies in the face of our introspective sense of our cognitive capacities. The historical trend in the sciences of the brain is one of implacable fractionalization, the use of experimental information to continually complicate (and problematize) far more granular introspective assumptions. One need only compare the contemporary picture of memory to Plato’s image of memory as an ‘aviary’ in the Theaetetus, or the intricacies of modern cognition to Aristotle’s definition of nous as “the part of the soul by which it knows and understands” in De Anima (3, 4), to appreciate the radical extent to which the ‘heuristic brain’ contravenes what seem to be intuitive default assumptions regarding the monolithic simplicity of cognitive function.

More importantly (particularly with respect to the Knowledge Argument), introspective blindness to the plural, heuristic constitution of our cognitive capacities entails a corresponding blindness to the various scopes of applicability belonging to a given heuristic. How can we know whether we’re applying a certain heuristic ‘out of school’ when we have no idea we’re applying a heuristic at all? This underscores the sense in which the ‘heuristic brain,’ far from trivial, possesses dramatic philosophical consequences, not the least of which is the possibility raised here, that the controversy evinced by Jackson’s notorious thought experiment is itself an artifact of heuristic misapplication, a kind of ‘conceptual Muller-Lyer illusion.’ Models of cognition like Todd and Gigerenzer’s adaptive toolbox (1999, 2012) or Gary Marcus’s kluge assembly (2008, 2009) force philosophy to abandon the naive, idealized notions of cognition entailed by traditional notions of ‘Reason’ or ‘Understanding’ or what have you, and to consider the specific kinds of tools philosophers might be using, and whether they are, quite simply, the proper tools for the job. The difficulty philosophers face, of course, is that the sciences of the brain have yet to provide anything more than the merest sketch of what those tools and jobs might be. The research program of ecological rationality is just getting underway. We know that memory, learning, and environmental cues somehow drive and/or constrain heuristic ‘selection,’ but little else (Todd and Gigerenzer, 2012, pp. 21-22).

The present paper can be read as an early, speculative step in the direction of what I think will be a dramatic, wholesale renovation of our understanding of philosophy and its traditional problematics. Contra Chow, the ubiquity of heuristics as characterized in the context of ecological rationality is the primary source of their significance, at least at this stage in the game. A ‘heuristics all the way down’ approach to cognition will only count as philosophically trivial once its consequences are generally acknowledged and incorporated within the discipline as a whole.

.

3) The Inverse Brain

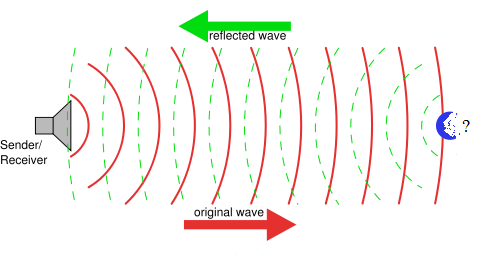

The interpretation of the Muller-Lyer illusion given above poses the depth heuristic as a solution to something called the ‘inverse optics problem.’ In many ways, the information reaching the retina is profoundly ambiguous. The moon, for instance, is approximately 1/400 the size of the sun, but it is also approximately 400 times closer than the sun, such that it appears to be the same size given the visual information we have available. This kind of source ambiguity pertains to all retinal images. Since the same retinal information could have a potentially unlimited number of environmental sources, the brain requires some means of accurately incorporating that information in its environmental models. The basic idea behind the depth heuristic interpretation is that the visual system uses various configurations of available visual information (such as the geometrical cues used in the above presentation of the illusion) to assumptively ‘source’ the remaining information, to make probabilistic predictions. This is what makes the problem of retinal ambiguity an inverse problem more generally, which is to say, a problem where information regarding some distal phenomenon must be derived from proximal information that is somehow systematically interrelated with that distal phenomenon. Innumerable technological modes of detection and observation operate on this very principle. Lacking direct access to the object in question, we can only infer its properties either passively from the properties of preexisting signals (as in the case of reflected light) or actively from the properties of signals we ourselves transmit (as in the case of radar, which reads reflected radio waves).
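The moon and sun example can be checked with a little arithmetic. The sketch below, assuming approximate mean diameters and distances in kilometres, computes the visual angle each body subtends at the eye; both come out at roughly half a degree. It then scales a moon-sized object’s diameter and distance by the same factor to show that the visual angle, which is all the retina receives, does not change: the proximal signal alone cannot tell a small, near source from a large, far one.

```python
import math


def visual_angle_deg(diameter_km: float, distance_km: float) -> float:
    """Angle (in degrees) subtended at the eye by an object of the given size and distance."""
    return math.degrees(2 * math.atan(diameter_km / (2 * distance_km)))


# Approximate mean values in kilometres.
SUN_DIAMETER, SUN_DISTANCE = 1_391_400, 149_600_000
MOON_DIAMETER, MOON_DISTANCE = 3_474, 384_400

sun = visual_angle_deg(SUN_DIAMETER, SUN_DISTANCE)
moon = visual_angle_deg(MOON_DIAMETER, MOON_DISTANCE)
print(f"sun: {sun:.2f} deg, moon: {moon:.2f} deg")

# Scaling size and distance together leaves the retinal angle unchanged,
# so many different distal sources fit the same proximal signal.
for factor in (1, 10, 400):
    angle = visual_angle_deg(MOON_DIAMETER * factor, MOON_DISTANCE * factor)
    print(f"{factor:>3}x size and distance: {angle:.4f} deg")
```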

In fact, you could say the brain itself is primarily an inverse problem solving system, an evolutionary artifact of our ancestors’ need to track and model the structure and dynamics of their environments. For the sake of convenience, we can call the distal systems that the brain tracks and models lateral, and the proximal mechanisms that the brain uses to track and model distal systems medial. In these terms, the inverse problem is one where relevant features of lateral systems must be derived via the properties of medial systems. The brain belongs to its environments; it can only track and model reproductively relevant distal systems (predators, food sources, mates, and so on) in its environments via various proximal elements of those environments (visual, auditory, olfactory, and tactile systems) that reliably and systematically correlate with those systems. The inverse problem is precisely the problem of the ambiguity intrinsic to those systematic correlations, the fact that an indeterminate number of lateral circumstances can give rise to the same medial effects.

The brain is able to track and model precisely because it is environmentally embroiled. But this also means the brain is physically unable to track and model certain easy-to-demarcate regions of its environment–namely, those medial components primarily engaged in tracking and modelling lateral systems. The brain, in other words, necessarily suffers what might be called medial neglect: light illuminates without being illuminated, eyes see without being seen, the primary visual cortex models without being modelled. You could say solving its distal inverse problem requires neglecting its proximal inverse problem–or in other words, an inability to cognize itself.

The brain, in other words, is blind to its own (medial) functions. Now before tackling the obvious objection that conscious cognition and experience demonstrate that the brain is not at all blind to its own functions, I simply want to point out how, given the definition of heuristic introduced above, the inverse problem means that the brain itself would have to be a kind of heuristic device even if toolbox theories of cognition were proven incorrect. The mechanistic solution to the inverse problem requires the systematic neglect of tremendous amounts of environmental information, namely, that pertaining to the (medial) mechanisms involved. Given that every heuristic possesses a ‘problem ecology,’ which is to say, a limited scope of application, this provides an elegant ‘mechanistic sketch’ (Craver, 2007, Craver and Piccinini, 2011) of what is commonly referred to as ‘cognitive closure,’ the trivial observation that innumerable problems exist that likely outrun our capacity to solve them. As I hope to show, this mechanistic sketch, far from a prelude to Mysterianism, provides a plausible explanation for why the Knowledge Argument lends itself to such an interpretation.

This matters because the question here, as in Jackson’s notorious thought experiment, has to do with conscious cognition and experience.

.

4) Inverse Metacognition: The Biologist-in-the-Burlap-Bag

The inverse problem means the brain, for basic structural reasons, has to be a heuristic device, one that facilitates problem-solving via the systematic neglect of information. This necessarily limits the kinds of problems that it can satisfactorily solve. In turn, this means that it will be regularly confronted with insoluble problems, many of which, we might presume, directly bear on the organism’s chances of reproductive success. It should come as no surprise, then, that at least one species of brain developed the capacity to access information from ‘beyond the pale’ of medial neglect–or in other words, to tackle the inverse problem posed by itself.

The brain, in other words, faces what might be called a ‘meta-inverse problem,’ one turning on the mechanistic constraints arising from its original inverse problem. Once again, it needs some way of gleaning the information regarding the structure and dynamics of various target systems required to effectuate some reproductively advantageous behaviour. There can be little doubt that this is precisely what human metacognition does: access the information required to solve problems as best as possible given the resources available. But the devil, as I hope to show, is in the details, and the issues raised once one considers introspection or metacognition through the lens of the inverse problem quickly become difficult unto diabolical.

One of the reasons the inverse problem proves so illuminating in this regard is that it clearly characterizes the problem of metacognition in a manner continuous with the problem of cognition more generally, which is to say, in terms of information access and heuristic resources. Far from magically apprehending itself, the brain, at minimum, has to do the same hard work required to track and model its environments. It is, after all, simply more environment, another causal system to be cognized. Not only does this mean metacognition can be mistaken, it means it can be mistaken in ways analogous to environmental cognition (social or natural) more generally. For instance, a common mistake in environmental cognition involves the perception of aggregates as individuals, as when we mistake swarming ants for, say, spilled paint at a distance. One could even argue this ‘confusion’ characterizes all objects period, given modern physics! The fact is, lacking the information required to make differentiations, we regularly cognize aggregates as individuals. On this way of looking at things, the classic problem posed by the ‘unity of consciousness’–the apparent ‘introspective verity’ that famously convinced Descartes to posit experience as an ontologically distinct substance–is simply an illusory artifact of missing information. Conscious experience appears ‘unified’ simply because metacognition lacks the information and/or cognitive resources required to model its complexity.

The salient difference between cognizing swarming ants as spilled paint and metacognizing conscious experience as unitary, of course, is that we can always investigate the ‘spilled paint’ and discover the ants. In other words, we can always stroll up and gather more information. With conscious experience, on the other hand, metacognition has its nose pressed to the glass, so to speak. Lacking any means of varying its information access, metacognition can cognize only the illusion of unity, which therefore comes to seem apodictically true.

This simply underscores the fact that metacognition faces a number of constraints in addition to those suffered by cognition more generally. To consider the profundity of positional or perspectival constraints, merely ask yourself how a wildlife biologist bound in a burlap bag with a bonobo would fare versus one observing a bonobo in the Congo Basin. As bizarre as this analogy may seem, it makes clear the way proximity and structural contiguity, far from constituting cognitive advantages, impose severe information gathering constraints. Certainly there are kinds of information accessible to the biologist-in-the-burlap-bag that are inaccessible to the biologist-in-the-Congo-Basin, but surely the balance, in sum, has to favour the latter. Being wired to one’s target–in this case, components of the most complicated mechanism known–forces the brain to make some drastic heuristic compromises.

Once again, the inverse problem allows us to see why this must be the case. The accuracy (as opposed to the efficacy) of our brain’s medial tracking and modelling of lateral systems depends on the information available to cognition. As we saw above, the inverse problem means that the sensory information is inherently ambiguous: the brain requires vast amounts of ‘contextual’ information to reliably track and model the sources of that information. In the case of the interpretation of the Muller-Lyer illusion given above, the context includes a wealth of probabilistic information regarding the potential sources of retinal images. From the standpoint of the brain, this is what ‘getting perspective’ means: the accumulation of relevant contextual information. If we want to learn more about an apple, say, we engage in information seeking behaviour–or what Karl Friston calls ‘active inference.’ We walk around the apple, we pick it from the tree and inspect it ‘up close,’ we study its constituent parts, or we step way back, observe its life cycle, and so on. We embed it, in other words, within an informatically rich contextual reservoir of prior modelling and successful tracking. The environment provides the relatively limited information our brains need to access, in a timely and relevant manner, the enormous amount of information it has already accumulated.

And if we want to learn more about redness? We can turn to psychophysics, learn sundry details regarding various discriminatory capacities in a wide variety of contexts, and–? Not much else, it seems, aside from waxing poetic–which is to say, discussing what it is like. As with our biologist-in-the-burlap-bag, we have no way of ‘getting perspective,’ of accumulating the information required to metacognize redness beyond a certain point. Like the prisoners in Plato’s cave, metacognition is chained to a single informatic perspective.

Given the kinds of neural, sensory, and behavioural resources the brain requires to solve the inverse problem in its distal environments, it seems safe to assume that the inverse problem is largely, if not entirely, intractable for metacognition. The information available is simply too low dimensional. Moreover, given the evolutionary youth of the metacognitive adaptive toolbox relative to the cognitive, it seems safe to assume that our metacognitive heuristics are no more capable of doing ‘more with less’ than our cognitive ones.

To say the brain cannot solve the inverse problem posed by itself is to say that the brain cannot accurately track and model itself: quite simply that the brain is blind to the fact that it is a brain. And as it so happens, many of the conundrums and controversies you find in philosophy of mind are exactly those you might expect given this model of ‘inverse intractability.’ One might expect, for instance, that metacognition would have tremendous difficulty sourcing its target–as is notoriously the case with the mind-body problem. And since, quite unlike our biologist-in-the-burlap-bag, we are born in our skulls, one should expect this inability to seem as natural as can be. Since we have no metacognitive access to the limits of our metacognitive access, it seems inevitable that we would run afoul of some kind of WYSIATI or ‘What-You-See-Is-All-There-Is’ effect (Kahneman, 2012). One should not be surprised to find, in other words, the paradoxical assumption of complete metacognitive adequacy, or what Peter Carruthers calls ‘self-transparency.’

As I hope is apparent (at least as a pregnant possibility), considering introspective metacognition in the light of the inverse problem provides a way to dramatically recharacterize the issues and stakes of the mind-body problem. It does so by looking at metacognition and cognition as variants of the same problem: mechanistically yoking environmental (distal or proximal) information to the production of effective behaviour (the sensorimotor loop). It does so, in other words, by refusing to see metacognition as anything exceptional, as anything more than another set of mechanisms packed in a neural cave with the very mechanisms it is supposed to somehow track and model. And it argues, quite persuasively I think, that the apparent peculiarities belonging to those models (what we take to be conscious cognition and experience) are largely artifacts of information privation and heuristic incapacity.

.

5) Some Philosophical Consequences

Thanks to the inverse problem, the brain is prone to suffer medial neglect, the inability to track and model those (medial) systems tracking and modelling its lateral environments. The brain thus poses a second-order inverse problem to itself, one that it simply cannot ‘solve’ given its astronomical complexity, its structural entanglement, and its evolutionary youth. This inverse intractability, I suggested, reveals itself in numerous ways.

However, one question has been floating in the background of this discussion of inverse intractability: why? Why, given the intractability of the medial inverse problem, would evolution select for metacognition? Well, because there’s intractable and then there’s intractable. It’s all a matter of degree. Snails, no doubt, find much more of their environment ‘intractable’ than we do, and yet muddle on nonetheless, relying on heuristics that are far too granular to approximate what we are wont to call ‘knowledge’ but are effective all the same. We can call these ‘efficacy-only heuristics,’ where constraints on information access (and/or reaction time and/or processing power, etc.) preclude anything remotely resembling epistemic accuracy.

The adaptive toolbox of introspective metacognition is almost certainly filled with these efficacy-only heuristics. It is far more akin to snail cognition than anything the philosophical tradition has assumed, a suite of effective–as opposed to accurate–heuristics matched to a crowd of specific problem ecologies. Since we have no intuitive way of cognizing this, historically we have assumed (as Plato did with his aviary and Aristotle with his nous) that we possess but one faculty. We quite simply lacked the informatic and heuristic resources to do otherwise. In the absence of differentiations (information) we assume identity–which is just to say we get the facts wrong.

For traditional philosophy, the consequences of these observations are nothing short of catastrophic. On the ‘Blind Brain’ model posed here, theoretical metacognitive deliberation requires using tools that cannot be seen, adapted to problems that are entirely unknown. When we attend to the redness of the apple, we have no sense of relying on metacognitive heuristics period, let alone ones matched to a far different problem ecology. We simply ‘reflect,’ attending to whatever information is available with whatever heuristic resources are available, blithely assuming (though the history of philosophy shouts otherwise) the adequacy of both. And so, we leap headlong into speculation, struggling with problems, like the Knowledge Argument, that require metacognitive accuracy, that demand our brains accomplish what no human brain can do, namely, solve the second-order inverse problem it poses to itself.

We are now in a position, I think, to turn the Knowledge Argument on its head and to isolate the something about Mary–and perhaps the source of much other confusion besides.

.

6) What’s so Contrary about Mary

What makes a philosophical problem so problematic? Typically, conflicting intuitions. As with the Knowledge Argument, we find ourselves assenting to what seem to be independently cogent claims that are mutually incompatible. In this sense, it almost seems trivial to attribute the problem to some kind of heuristic conflict. Of course the Knowledge Argument triggers incompatible intuitions. Of course, what we (given our metacognitive straits) call ‘intuitions’ somehow involve heuristics. What else would they be?

My initial analogy between the Muller-Lyer illusion and the Knowledge Argument, as strange as it may have seemed, becomes quite obvious once one acknowledges the ubiquity of heuristics. Likewise, the pervasive and profound consequences of metacognitive neglect become quite obvious once one acknowledges the ubiquity of heuristics. Does anyone really think that introspection, unlike any other cognitive capacity researched by the sciences of the brain, will turn out to be as simple as it seems? Of course deliberative metacognition is blind to the complexities of its own constitution and operation. How could it not be?

Isolating the something about Mary, however, forces me to develop a more detailed, and therefore more speculative, picture. I’ve spent quite some time roughing out what I think is a plausible, thoroughgoing mechanistic account of the origins of the mind-body problem. Given the constraints falling out of the second-order inverse problem, metacognition is forced to make do with far less information than cognition proper. The ‘mind,’ I have argued, is the brain seen darkly from the standpoint of itself. Conscious cognition and experience as they appear to deliberative metacognition are likely the assumptive product of efficacy-only heuristics.

But as I noted in section (3) above, the brain is itself a heuristic device (consisting of a plurality of such devices), one that overcomes the inverse problem at the expense of medial neglect, that is, blindness to the system of medial mechanisms by which the brain comports its greater organism to its lateral environments. To say that the brain is a heuristic device, however, is to say that it possesses its own problem ecology. Neglecting information means forgoing the capacity to solve various kinds of problems. This suggests that considering the kinds of information neglected will allow us to infer the kinds of problems that can and cannot be solved. As I hope to show, medial neglect allows us to hazard several claims regarding the kinds of problems that lie within that ecology and the kinds of problems that violate it. Not only is the brain prone to trip over its own cognitive shoelaces, it is prone to do so in predictable ways.

The primary problem ecology of the brain is, quite obviously, its environment. Environmental concerns (social or natural) drive evolution. They command the bulk of brain functions. They even command our phenomenology, the fact that we see apples and nothing of the astronomical machinations that make seeing possible. Our intuition of naive realism, the apparent ‘transparency’ of conscious experience, attests to the environmental orientation of the human brain–or so most everyone assumes.

Since the environment, from a natural scientific standpoint, includes everything, it stands to reason that the brain, as a general heuristic device, has everything as its problem ecology. But this, we have since come to realize, is not the case. Numerous phenomena, it turns out, run afoul of what seem to be our ‘intuitive’ ways of understanding the world around us. Everything from statistics to relativity to quantum field theory violates, to greater or lesser degrees, our intuitive ways of comprehending our environments. One of the features that make science so revolutionary, you might argue, is its ability to institutionally and methodologically overcome our heuristic shortcomings.

Now why should this be a discovery? As innocuous as it might seem, I think this question possesses far-reaching consequences. Why is it that we seem to implicitly assume the universal applicability of our cognitive capacities? The obvious answer has already been discussed: metacognitive neglect. Given that we generally have no intuitive inkling of the kinds of tools we happen to be using to solve a given problem, how can we have any sense of their various scopes of applicability? Obviously we can’t. A corollary to the ‘default unity’ of cognition, one might surmise, is the default universality of its application. In the absence of information allowing us to differentiate between various scopes, metacognition simply assumes one scope.

This, I think, is what makes the first premise of the Knowledge Argument so intuitively plausible. We have, thanks to metacognitive neglect, no access to information pertaining to the limits of discursive knowledge, and thus no inkling that any such limits exist. Thus we run afoul of default universality, the blind assumption that discursive cognition is in fact capable of ‘solving all physical information pertaining to the perception of colour.’ This sets the stage for Mary’s subsequent experience of colour. It seems obvious that Mary gains some kind of information in experiencing red for the first time. Thus we find ourselves confronted by the spectre of the nonphysical, of information pertaining to something ‘over and above’ the physical. The two ‘lines,’ you might say, appear quite different.

Of course, many critics of the Knowledge Argument have targeted the universality of this first premise. Dennett (2006) poses the thought-experiment using ‘RoboMary’ rather than Mary, an artificial intelligence that can, in the course of accumulating all the physical information to be had regarding colour vision, run first-person simulations of the experience. This way, when RoboMary encounters red in its environment for the first time, there can be little doubt that she learns nothing new. For these critics, the Knowledge Argument is more a verbal trick than a profound philosophical puzzle. As Dennett (1991) writes:

The crucial premise is that “She has all the physical information.” That is not readily imaginable, so no one bothers. They just imagine that she knows lots and lots–perhaps they imagine that she knows everything that anyone knows today about the neurophysiology of colour vision. (399)

The primary reason so many fall for Mary, in other words, is intellectual laziness. But Dennett later concedes that he had underestimated the force of the thought-experiment, or as he puts it, “the allure of this intuition pump” (2006). Yet rather than consider the possible natural basis of these intuitions, he confines his examination to the question of whether they are plausible. Is it plausible that Mary, possessing all the physical information possible regarding colour, will be surprised when she finally sees red? Is it question-begging to assume that Mary’s first-person experience of colour must be a simple of some kind and not a functional aggregate?

The approach taken here, however, is somewhat more interested in what the Knowledge Argument says about us than what it says about physicalism or the Hard Problem more generally. The reason so many assume that Mary will be surprised when she finally sees red is that they think it possible for Mary to have all the physical information pertaining to red without experiencing red. The reason they think this possible, however, has little or nothing to do with any disinclination ‘to do the hard work of imagining.’ On the account given here, they think this possible because metacognitive neglect makes it entirely natural to assume the universal applicability of discursive cognition. And typically, something more compelling than accusations of laziness is required to convince people to abandon what seem to be forceful intuitions!

But even if we agree that discursive cognition, as necessarily heuristic, only seems to be universally applicable, this only means that the intuition of universality is deceptive, not that the first premise of the Knowledge Argument is false. It could be the case that all physical information regarding colour vision does in fact lie within the problem ecology of discursive cognition, which is parochial in some different respect. In other words, pointing out the role metacognitive neglect plays in faulty assumptions of heuristic universality is not enough to argue, as I am, that the Knowledge Argument amounts to a discursive analogue to the Muller-Lyer illusion, an example of what happens when atypical cues trigger the deployment of heuristics out of school. All metacognitive neglect establishes is that it is entirely possible that this is the case, nothing more.

The problem ecology of the brain does not include everything. Nevertheless, the primary problem ecology of the brain is environmental: cognition is primarily oriented to the solution of environmental problems, just not all such problems. So which problems make the heuristic cut? Science clearly indicates what might be called ‘scale bias’; the further scientific discovery takes us from the scale of our workaday problems–to the extremes, say, of astrophysics on the one hand and quantum mechanics on the other–the more counterintuitive it seems to become. But as cognitive psychology has discovered, a great many problems on the scale of the everyday also seem to fall outside what might be called our ‘Goldilocks problem ecology,’ the zone of what is most readily soluble.

And Mary, I hope to show, is precisely such a problem.

.

7) The ‘Something’

What allows the brain to (mechanically) engage its environments is the fact that it is (mechanically) embroiled in its environments. This necessitates medial neglect, or blindness to those (medial) portions of the environment engaged in tracking and modelling those (lateral) portions of the environment tracked and modelled. Medial neglect, however, is by no means absolute. To the extent we can metacognize anything at all, our brains do track and model their medial tracking and modelling. The question is: how much, and how well? In section (4), I spent quite some time laying out why metacognition has no hope of accurately solving the second-order inverse problem the brain poses to itself, why it was forced to rely on what I called ‘efficacy-only’ heuristics. The key, once again, is to refuse to allow for any kind of ‘magical problem solving,’ to see metacognition as operating according to the same mechanical principles as cognition more generally, only with far fewer resources, far less evolutionary tuning, and a far more problematic target. In section (5), I discussed the philosophical consequences of this, how theoretical metacognitive deliberation amounts to using tools that cannot be seen, adapted to problems that are entirely unknown.

This offers a fair assessment, I think, of our cognitive straits when we encounter the Knowledge Argument. No one knows which heuristics the thought experiment triggers, or how. A fortiori, no one knows the problem ecologies corresponding to those heuristics.

So here’s what I think is happening. We are inclined to agree that Mary can learn all the physical information regarding the neurophysiology of colour simply because, thanks to metacognitive neglect, we are prone to think our cognitive capacities (as we intuit them) are simple rather than fractionate, and therefore universal in their application. Mary’s subsequent exposure to colour and the introduction of first-person information violates that intuition of universality. So it seems as though we must either deny the intuitive appeal of the first premise, as Dennett advises, or allow a second, distinct order of being into our ontology, the phenomenal or the mental or what have you.

The attraction of the latter turns on the environmental bias of our cognitive resources. When we reflect on experience, the idea (also argued by Eric Schwitzgebel in his excellent Perplexities of Consciousness) is that we are relying on the same set of resources we use to troubleshoot our natural and social environments. Deliberative theoretical cognition treats efficacy-only metacognitive information as just more efficacy-and-accuracy environmental information: we submit conscious experience, in other words, to heuristic machinery adapted to problem-solving the objects of experience. The drastic difference in ‘formats’ (low-dimensional efficacy-only versus high-dimensional efficacy-and-accuracy) suggests, as it suggested to Descartes so long ago, that we are dealing with a drastically different order of being. Given the biologist-in-the-burlap bag dilemma facing metacognition, we have no way of intuiting otherwise, no metacognitive access to information regarding the inadequacy of the (efficacy-only) information that is available.

What makes the thought-experiment so bewitching, then, is that it simultaneously caters to two complementary intuitions, both arising from limits on introspective cognition that are substantial yet entirely invisible to intuition. It triggers the presumption of universality that falls out of our inability to metacognize or intuit our adaptive toolbox: it seems natural to assume that Mary can learn all the physical information regarding colour vision. It also triggers the presumption of environmental reality that arises from submitting metacognitive (low-dimensional efficacy-only) information pertaining to conscious experience to cognitive systems adapted to environmental (high-dimensional efficacy-and-accuracy) information: it seems that her experience of red has to be ‘something,’ given that it indisputably provides Mary with information of some kind over and above the physical information she already possesses.

The thought-experiment becomes an intuitively compelling argument for dualism and against physicalism because of the way its information structure plays on our metacognitive shortcomings. This is basically what the depth-heuristic theory presumes of the Muller-Lyer effect: the information structure of the lines presented triggers the application of learned visual heuristics outside of their adaptive problem ecologies, leading to the apparent perception of two different lengths. Likewise, the information structure of Mary generates the apparent cognition of two fundamentally different species of information by triggering the inappropriate application of heuristics recruited via deliberative cognition. One might suppose the effect is so much more vivid in the case of visual shortcomings because of the extravagant resources expended on vision. The computational expense of the accuracy it provides is balanced against the evolutionary advantages arising from the different problems it allows our organism to solve. The illusion is obvious because vision is heuristically high-dimensional. With Mary the situation is much murkier simply because metacognition is so low-dimensional and ‘efficacy oriented’ in comparison.

.

8) Life After Mary

All of the above is hypothetical, of course. The very metacognitive insensitivity to our heuristic complexity that my argument turns on means that I can only speculate on the intuitions and heuristics involved. As it stands, the sciences of the brain remain far too immature, I fear, to submit these claims to much credible experimental scrutiny at present. All things being equal, the situation is likely even more complex than my complications suggest, and only seems as simple as presented here for want of adequate metacognitive information. The fractionation and complication of our metacognitive assumptions has been the decisive trend. But I think a more complicated descriptive picture, inevitable as it may be, would do far more to strengthen my normative case against the Knowledge Argument than to weaken it.

The reasons for this fall out of the consequences of the heuristic brain. To say human cognition is ‘heuristic all the way down’ is to say all human cognition is parochial, adapted to specific problem ecologies. And this suggests something that many might find extraordinary. Some might have caught a whiff of this ‘something extraordinary’ above, when I suggested that the attribution of ‘environmental reality’ to conscious experience was a kind of heuristic misapplication. If conscious experiences aren’t ‘real,’ one might legitimately ask, then what are they? They have to ‘be something,’ don’t they?

Perhaps not, no more than fundamental particles have to ‘be somewhere.’

It should be evident to anyone who carefully considers the challenges faced by neuroendogenous metacognition that accuracy is simply not a plausible neurocomputational possibility. Efficacy-only heuristics are all but assured. The alternative is a brain that has solved the inverse problem of itself, a magical feat presumed by many and yet explained by no one. And yet, to say that metacognition cannot be accurate, even though it can be efficacious (albeit for problem-solving tasks we know nothing about), is to say, quite literally, that whatever the entities metacognized, there are no such things. Prudent and conservative as the supposition of metacognitive efficacy-only heuristics may be, it has the radical consequence of entailing the nonexistence of things like affects, colours, and so on. When the problem of these things is posed to deliberative cognition, our brains (via some occluded heuristic complex) almost inevitably judge them to be real, entities akin to those they were primarily evolved to track. Conscious experience as I endure or enjoy it simply has to exist. What is more, since it appears to be the lens through which my environments are revealed, it even seems to possess, as the phenomenological tradition so famously claims, a kind of existential priority. Short of experience, we quite naturally think, there could be no knowledge of the world.

Indeed this is the case, even though there is no such thing as ‘conscious experience.’ One way to put this would be to say that although we certainly experience the world we have never, not once, experienced ‘experience.’ Experience is not among the things that can be experienced, though our heuristic predicament is such that we continually hallucinate that this is the case. Why? Because we have no other means of metacognizing the information available. We cannot but mistake experience when we reflect upon it, because we have no other way to reflect on it. We have no other way of reflecting on experience because our ancestral brain, overmatched by its own complexity, abandoned accuracy and resorted to efficacy to overcome the challenges confronting it.

But then, you might ask, what are my misconceptions? Surely my misconceptions must ‘be something’! How can I ‘have them’ otherwise? I admit this sounds appealing, but all it actually does is repeat the same mistake of submitting efficacy-only information to efficacy-and-accuracy heuristic machinery. Your misconceptions, while certainly more than ‘nothing,’ are simply not ‘somethings’ in the sense of possessing environmental reality. You are misconceiving your misconceptions if you think otherwise.

Of course, any number of cognitive reflexes, learned or innate, will balk at this assertion. But in a strange sense, your cognitive discomfort is the point. What we require are ways to think around what we find most intuitive in order to think accurately about what is going on. We need to learn new heuristics. Part of this includes learning to appreciate the intrinsic parochialism of what seem to be our most fundamental concepts, the nontrivial fact that all our ways of knowing involve the systematic neglect of certain kinds of information–or in other words, heuristics.

Our intuitive tendency to assume the universality of ‘environmental reality’ is a consequence of our metacognitive blindness to the consequences of medial neglect. We cannot cognize the information missing; therefore we cannot cognize the ways that missing information limits the scope of problems that can be solved. Medial neglect, as a structural consequence of solving the inverse problem, inevitably restricts the kinds of problems that can be solved to the external environment. The heuristic brain can puzzle through small engine repair because the tracking systems do not appreciably interfere with the system tracked. The medial mechanisms that enable small engine repair do not impact the lateral mechanism that is the small engine. Behaviour intervenes from the outside, as it were. The dimensionality of the problem explodes when those medial mechanisms become functionally entangled, when they impact their distal targets, as with so-called ‘observer effects.’ The degree to which tracking/modelling interferes with the system to be tracked becomes the degree to which the latter becomes noisy. As anyone who has lost a fistfight knows, observer effects catastrophically complicate the inverse problem. You move, it responds. Action begets reaction begets reaction, and so on. Once the brain becomes functionally entangled in this manner, proximal effects no longer convey effective information regarding the system to be tracked so much as far less effective information regarding the greater system that takes it as a component.

The effective problem ecology of the ‘reality heuristic,’ in other words, requires the functional independence of the systems tracked. Thus, the heuristic assumes some degree of functional independence and asserts of the information provided that the problem to be solved is another environmental thing. Thus the homunculus fallacy (or, as Dennett recharacterizes it, the Cartesian Theater): the manner in which our attempts to troubleshoot consciousness and the mind effortlessly assume the structure of our attempts to problem-solve our environments. And thus the miasma called the Philosophy of Mind: the more functionally entangled the systems tracked are in fact, the less applicable/effective the ‘reality heuristic’ becomes. Since we possess no intuitive way of cognizing this limitation, we intuitively assume that no such limitation exists; we think, once again, that what we see is all there is. This is why we find it so appealing to ‘reify’ experience, to transform the ‘inner’ into a version of the ‘outer,’ even though, as the history of philosophy has shown, it amounts to an obvious cognitive dead end. Like patients suffering Anton’s Syndrome, we are utterly convinced that we can see, even though our every attempt to navigate our visual environment ends in failure.

So then, just what is conscious experience?

Call it ‘virtual,’ if you wish, a kind of mandatory illusion arising out of deliberative metacognition, then try to game ambiguities in an attempt to salvage what you can. Or embrace a kind of quietism about consciousness, and avoid the topic altogether. Or continue (as I do) muddling through the information integrated and metacognized with a new and radical suspicion, one cognizant of the fact that the picture that science eventually delivers will–all things being equal–contradict our intuitive, traditional understanding of ourselves every bit as radically as it contradicted our traditional understanding of the cosmos…

That ‘noocentrism’ is as doomed as geocentrism.

A suggestion: section 7 is a bit obscure without the stuff discussed in section 8. I expect someone not familiar with your writing would be even more confused. And of course I had to read all this just before going to sleep.

Was there anything specifically that caused you problems? I reread it, and I couldn’t see where 7 begged 8.

You have the time to write this but not to give any info on your book?

And what info would that be beyond what I’ve already given? And what makes you think I wrote this all at once? It’s been in the blocks for months.

Classic!

YORB, the book’s in a kind of fine-wine mode right now, i.e., being rewritten simply to refine it. No doubt if Scott was forced to publish it right now, we’d all really enjoy what’s already there. But it’s going through a refinement stage to add even more nuance to it. You don’t have to worry that the book’s not written; the book exists (as far as I understand it). The present is under the tree, so to speak; it’s just a matter of waiting for Christmas!

Hey.

First, I found this much more clearly written than prior posts on BBT. So that’s nice.

I have a few comments. With regards to The Mary Problem, I’m reminded of a comment made by a neuroscientist colleague of mine regarding philosophical “problems” of that nature, which is, “You just have to say ‘No’ early and often.”

In this case, the “no” comes in at “…and acquires, let us suppose, all the physical information there is to obtain about what goes on when we see ripe tomatoes, or the sky, and use terms like ‘red’, ‘blue’, and so on.”

No. Let’s not suppose, since it’s just fucking stupid to do so. This is why I don’t read philosophy of mind anymore, unless it’s blogged about by a fantasy author whose books I like. Because the “problems” are almost always a waste of time that can only bottom out in a pointless semantic debate about what “all the physical information” means (including “all”, and “physical” and “information”).

Why would we imagine that we can think usefully about how a person who knows things we ourselves don’t yet know would react in this situation? But I guess this is why I often miss the point of BBT – I often already assume aspects of it, in a sense. In this specific case, I assume that our intuitions about “problems” like this are worthless.

What I do like about this account is that it provides a take on just why these dipshit armchair “problems” tend to take people for a ride, and how. That aspect of the explanation seems plausible to me, and represents value-added.

There seems to be some tension between the idea that “introspective philosophy” could work, but we just have the wrong kind of brains for it, and the simpler idea that trying to learn things about the world without actually going out into the world to test and measure them (i.e., science) is just kind of dumb, lazy, and pointless in general. I tend to emphasize the latter, while BBT seems to insist on the former. I am astonished, honestly, that people considered to be smart could waste so much time on things like The Mary Problem, or the Chinese Room, etc.

I am less convinced that our metacognition is as radically impoverished as is sometimes suggested in BBT. I think a lot of human intelligence is oriented around competing and cooperating with other people, and our theories of mind are geared for this, not for The Mary Problem and the like. I think a case could be made that metacognition is not bad for modeling other minds, if not our own brain (e.g., we have absolutely no introspective sense of where in our skull our various mental faculties reside). This is, I think, the “problem ecology” of philosophy of mind, in practice, if not in academia, and I think it may be worth a bit more than BBT seems to give it credit for.

I think the ironic thing is that while each of us may have a wildly inaccurate “folk model” of the self, or the mind, etc. we may be quite correct in assuming that other humans, with brains like our own, share very similar models. As a result, we are forced to use it when interacting with other brains because the folk model is quite effective at predicting the behavior of other people. Fiction writing in general relies on such models, I would think, to be effective (i.e., “moving”, “realistic”, etc.). We find certain forms of “accuracy” repellent (e.g., the action hero gets suddenly, “arbitrarily” sidelined with pancreatic cancer).

Finally, I would like to know what implications there are for BBT that are not merely “philosophical”. This morning I was listening to a TED talk about problems with eyewitness testimony, and the fact that people believe that estimates of confidence by eyewitnesses really mean something, when evidence suggests that they don’t. The implications for our legal system are obvious. Are there similarly concrete implications of BBT that don’t piggyback on the empirical findings invoked by it (i.e., Kahneman, Ariely, etc.)? How should we educate people differently about what they “really” are? How would it impact ethics?

Cheers.

Those are some damn good questions. The question about practical ethics (I’m assuming you would agree with me that ‘meta-ethics’ is another one of these ‘lazy-dumb’ pursuits) is one that I’m really only beginning to explore. I do think it would be quite easy to research predictions like the ‘only game in town effect’ or the ‘out of play illusion’ that fall directly out of BBT, and that understanding these would be significant for the same reason so many in economics and finance (in addition to engineers) are looking closely at ‘unk unk’ problems. But I am, in the end, just a wanker, so there’s not much more I can do than offer vague directions for experimentalists to go in. The big thing BBT does, I think, is the very thing you point out: it disabuses philosophers of their ‘only game in town’ assumptions when it comes to these and a host of other issues.

I entirely agree with what you say regarding the power and efficacy of ‘mindreading’ heuristics; I actually cut a section dealing precisely with these issues because the considerations weren’t germane to the general argument. It’ll probably resurface in a couple of months’ time in some other vehicle. The point I’m making throughout (and it’s one that’s been made by others, like Dan Dennett and Robert Cummins, only in more conceptually constrained registers) is the importance of the distinction between efficacy and accuracy. It’s not that certain heuristics are ‘impoverished’ (though I admit my rhetoric too often slides in that direction), but that they are focussed, and often incredibly powerful as a result when they are used in a manner consistent with their problem ecology. So I agree that social heuristics are incredibly powerful, when used to socialize. As soon as we begin to theoretically reflect on our ‘social intuitions,’ however, we’ve jumped overboard, become lazy-dumb. There are just no such things as ‘norms,’ ‘purposes,’ ‘goals,’ and the like. They’re useful posits to get by with when we find ourselves explaining/manipulating/predicting our fellows, but we are simply begging for trouble (what is presently called ‘philosophy’) when we think we can ‘pin them down’ the way so many philosophers (especially pragmatists and inferentialists) are so prone to do. What are they, then? Well, at this cartoon level of resolution: efficacious heuristics that leverage their power by neglecting the very information required to cobble together anything remotely resembling an accurate picture. That’s what they are. BBT then takes the further step of arguing how we can parlay this understanding into a broader account of why philosophy of mind suffers all the problems that it does. And in doing this, I think anyway, it kicks open the conceptual door for neuroscience to impact every corner of the human. It gives philosophy a run for its money in domains where it once seemed the only game in town. At some point, if it’s confirmed, it could bury traditional philosophy altogether.

“This is why we find it so appealing to ‘reify’ experience, to transform the ‘inner’ into a version of the ‘outer,’ even though, as the history of philosophy has shown, it amounts to an obvious cognitive dead end. Like patients suffering Anton’s Syndrome, we are utterly convinced that we can see, even though our every attempt to navigate our visual environment ends in failure.”

But Scott, isn’t that the problem in a nutshell: that consciousness is not a tailored individual trait but a trait that evolved for social survival? Just about everything in our world was created by individuals we never knew or met, but everybody takes individual possession of it, either in a positive or negative sense. Language and behavior are things we acquired from our environment with little conscious thought; however, our behavior is easily modified by scolding, promises of reward, or rumors of danger. We even select our specialists, so some are skilled doctors, teachers, and specialists in swarming ant recognition. We really don’t see the total picture, and language is intentionally ambiguous to protect within the groups or between the groups. Little wonder we are baffled by consciousness as an individual trait when our individuality is the least accessible to ourselves but so obvious to others.

Also, to me the Mary argument underscores that when we introspect, our nervous system easily becomes a house divided. Instead of Mary the neuroscientist, we have Mary the classical composer, who one day discovers Beethoven’s 212 Symphony; she reads the musical score but has never heard it performed. The first time she hears an orchestra perform it, does she learn anything new? Well, it is easier to say no, because sound and music are so direct, as opposed to color perception, which involves more inner translational heuristics and is more characteristic of the house-divided paradigm.

Great points all. I actually think smell works even better than music, simply because smell is even less ‘transparent,’ less a victim of medial neglect.

Let me give a little variation on Mary: a few weeks before seeing red for the first time, a group of scientists discover Mary’s dilemma. They begin to communicate with her by phone and through the Internet via a monochrome display. Finally, when Mary sees red, the conversation goes something like this:

M: Ooh! Aah! Wow!…

S: You see Mary!

What more can they say? Language itself has had this limitation all along, or it comes back to what we blogged about last year: Stevan Harnad’s Symbol Grounding Problem.

As an engineer, I do not think they have the structural problem solved, or else they believe the brain alone is the seat of consciousness and meaning. Is the heart the circulatory system? As I see it, the mind-body problem is the human central nervous system problem, or the brain (in the head)/brain (in the lower part of the head and body) problem. Just as hearts did not evolve as standalone organs, neither did brains. Brains in simpler life forms like insects are easily STRUCTURALLY IDENTICAL TO OURS… if we approach from the sensorimotor POV. The Blind Brain/Explanatory Gap/Cognitively Closed/We Could Be Zombie Insects problems all stem from the Why Do We Have Language-Meaning problem. My theory is that our brains were rooted in our bodies along the path of evolution, just like every other gravity-defying sensorimotor creature like ourselves, but language-meaning comes about because of the more complex layering of the neocortical sheet, which syncs and sources the body it is rooted in. Why do I think this is correct? Because it still fits the fundamental stimulus-response model of all biological organisms.

I’m not really addressing the topic, but the Mary scenario seems to suffer from the use of absolute knowledge in its descriptor, i.e., ‘red’. It’s as if I said Mary had learnt all the textual knowledge in existence, back to front, on the matter of orgasms, but she’s never had one… what I could do is screw up the descriptor by then saying ‘she has an orgasm’. But that’s invoking absolute knowledge in the description itself. If I instead said that after *heh* various physical stimulations she had heightened arousal and then a high peak of pleasure… well, now we’re open to the question of ‘Would Mary, after all her learning, call what she went through an orgasm?’ Because what if she said no? Instead of the narrator of the scenario deciding it for her.

Same goes for ‘red’.

Hi Scott,

Before I go on rambling, let me ask you a quick question…why aren’t your books available on Amazon Kindle International (Croatia, specifically)?

They don’t show up in results and I had to go through US proxy (change IP address basically) to see them available.

Real shame, because I have been enjoying them immensely and recommending them to anyone and everyone.

I have seen them sold here in Croatia (paperback), hell I bought The Judging Eye in paperback here, but there’s (almost) nothing on Kindle.

Now, for the text above. I’m no philosopher, so I may not know a lot of definitions, terminology and trends in modern philosophy.

I have always been, however, very curious about the two fundamentally different aspects of our perception; I’ll call them “data collection” and “data presentation”.

Since I’m an engineer, the sensory input our body and organs provide is something that I can easily understand. Our senses, collected by various sensors, are transformed into electrical signals and transferred to our brain for processing. Most of our own technological wonders work in the same way, actually.

So we have a signal feed, and it is quantitative: it can be measured, drawn, and analyzed, quite mathematically, and therefore explained and reproduced.

How do we perceive it? Quite qualitatively: what we see, smell, hear, etc. cannot be described quantitatively in any sensible or precise fashion.

It is possibly quite the opposite of the signal that is fed into our brain. You can explain how the eye differentiates colors. You can explain how that is converted into nervous system signals. You can even craft an electronic camera that serves as a crude eye replacement. You cannot explain what the color red is. Sure, you can describe it the way our senses catch it (as a specific frequency in the visible spectrum), but that is not the way it’s presented to us at all.

We use words to describe what we sense (e.g., blue, red, bright, dark, rough), but these are only established terms (agreed on by a multitude of people), not something that can be measured or reproduced like the signals our nervous system feeds to our brain.

For example, we can neither prove nor establish that the color we both call red is in fact perceived the same way by both of us.

I may be saying red because I was taught that “that color is red”, yet I may perceive it totally differently.

And we may never, ever know if this is true or not.

It has to do with how our brain works, and the heuristics problem discussed above is closely connected to it.

We seem to experience only a fraction of our own “thinking”, the fraction we call consciousness/cognition.

All other signals (thoughts) are dimmed in the background. It has to do with how the neural matrix works, but damn if I know how consciousness arises from it. Neural networks can be explained mathematically (quantitatively), and our senses (and other stuff, like emotions) cannot. Sure, some will say there is a sound mathematical, neurobiological principle working “in the background” that makes us see the world as we do.

That is precisely the problem. It’s the missing link: the link between our quantitative brain engineering (say hardware, if you will) and our qualitative cognition and thought process. How do they translate into one another? How do you convert from something purely mathematical to something purely descriptive?

Furthermore, I have read somewhere that we do not in fact “forget” anything we’ve ever seen. I do not know whether this is correct, but it seems plausible enough.

Neural networks work by repetition: the neural pathway that sees the “most use” becomes prevalent over others, even over ones that are only slightly different.

Is it any wonder, then, that we have all the data in our brain but are unable to access it (repeat a path through our brain)? Or that there might be nothing harder than changing oneself (I mean truly, not just superficially)? You are going down a well-trodden path. It is the same with sensory data: what we perceive on a daily basis is made of objects we learned as kids, similar to how every rendered scene in CGI nowadays is made of triangles. I think it is possible to truly shock our own brain by giving it something radically new (a deep-space walk comes to mind).

So yes, the brain is indeed heuristic. As far as we can perceive, our thinking and decision-making processes are nowhere near a quantitative, mathematical approach.

Come to think of it, quantitative is not the way we think at all. You have to force yourself to think that way: to add, subtract, keep track of all the variables, or think procedurally at all.

Sorry if I derailed too far from the original topic, but I feel that a large portion of our own brain functions will stay hidden unless we explain the gap between the quantitative and the qualitative.

One second, you are happily in the realm of mathematics, to the point of making mechanical replacements for your body parts. The next moment, you are describing abstract decision making not only with incomplete data but, further, with no idea at all how that data is modelled.

I’m not sure why they wouldn’t be available, MS, but I’ll certainly ask my agent. BBT actually answers many of the important questions you pose here. Depending on your interest (and patience!), you might find it useful rooting around through the previous years’ posts.

In that case I owe you a double thanks: first for asking your agent, and second for giving me some more brain foodstuff to chew on (no, I’m not a zombie!).

I actually never knew about this theory of yours, so here I go to dig into it…

SB, you talk a lot about accuracy in this article. I wonder what the counterintuitive, nonsemantic definition of “accuracy” is, the definition that assumes the mind is made up of heuristics (i.e. processes that get jobs done in half-assed ways). If you’re going for a use theory of meaning or one that reduces the aboutness relation to natural meaning (information), then we’re talking about causal relations. A signal indicates its source, because the source causes the signal. For example, the rings in a tree trunk indicate the tree’s age, because there’s a causal relationship between them.

So some heuristics may cause what you’re calling accurate models of the exterior, while other heuristics may cause illusory, mistaken (but intuitive) pictures of the interior. But what I struggle to wrap my mind around is how we can understand the difference between the two without the semantic or normative distinctions in our back pockets.

There’s actually nothing ‘half-ass’ about heuristics. The work in eco-rationality has proven that they are often more effective than so-called optimization methods in addition to being far more robust and computationally efficient. All ‘accuracy’ means in this context is pluripotency or ‘broad band efficacy.’ It all turns on the amount and kind of information provided. Causal cognition is heuristic, but it stacks the efficacies very high, to the point where it can allow us to actually ‘get behind’ causal and intentional cognition.

I wish I could convince you to abandon this performative contradiction stuff. I understand its intuitive appeal, but it really is a nonstarter. Insofar as all our cognitive activity turns on our brain, insofar as our brain is another natural mechanism, and so long as I have some plausible empirical account of how our faulty, second-order intuitions of semantics or normativity arise from that mechanistic activity (namely, BBT), there’s no transcendental tack you can take that will get you what you want. You have to go empirical, and offer a counter-hypothesis of how intentionality arises as a real emergent product of the brain’s mechanistic activity. At that point, I’ll happily go toe-to-toe with you assessing the relative virtues and liabilities of the competing theories. This is what I take the power of BBT vis-à-vis traditional philosophy to be: it forces it to ‘go natural.’

But all that said, there IS a real problem lurking somewhere in your unease, I think. I feel problems everywhere, even as I remain fascinated by the theory’s ability to drastically rewrite (naturalize) the traditional (transcendental, armchair) terms of the debate. I watched the Boston-Pittsburgh game with Sheldon last night, and he managed to convince me that there’s more to the ‘triviality’ problem than I credited. Was Sheldon a classmate of yours, Ben?

I wasn’t trying to raise the self-contradiction problem. I’m trying to see how “drastic” BBT’s rewrite of the terms is. Of course, if it turns out that all genuine ways of understanding “accuracy” employ semantic concepts, and yet BBT says all those concepts are no good, then any empirical theory making use of those concepts to radically alter the debate must itself offer only an illusion of understanding.

That’s one side of a dilemma. The other is that if we have a radically new way of understanding “accuracy,” one that’s kosher according to the counterintuitive implications of BBT, we mere primitive humans are going to have a very hard time comprehending that new viewpoint. (Or maybe I should just speak for myself!) For example, you say accuracy is just super-duper causal power. First of all, that actually agrees with what I said, that the explanans of BBT can make use only of concepts of causal relations. But second, how on earth is accuracy the same as some causal power? Surely, that’s a reduction that’s lacking a new theoretical paradigm.

I do see plenty of radical implications of BBT. But I nevertheless worry that when you look under the hood, at some fundamental concepts of the theory, like “information,” “heuristic,” “mechanism,” or “accuracy,” you find all-too intuitive, semantic concepts after all, to which BBT isn’t entitled and which threaten to undermine BBT.

Do you agree, at least, that if BBT uses concepts in its explanans which come out as illusory in BBT’s explananda, BBT eats its own tail, regardless of how empirical that theory may be?

I don’t recall a Sheldon, but it’s been a while.

It all comes down to the self-contradiction charge, doesn’t it? It’s the second-order characterization as ‘irreducibly’ or ‘inescapably’ intentional that’s the problem. It’s your assumptions regarding what these things are. BBT doesn’t say ‘accuracy’ is ‘no good,’ only that traditional semantic concepts of accuracy turn on cognitive illusions. It’s the ‘Design caveat’ in evolutionary theory writ large. All I’m saying, pace Darwin, is that it’s all mechanical at root, that there is no magical telos or ethos or seme or what have you, even though when we deliberate on our understanding of complicated systems, these are the things we’re convinced we see, and that you seem convinced must be real because of it! I’m using all these concepts with the understanding that they are heuristic mechanisms, exploiting environmental information structures in various ways, in a way consistent with the natural sciences. You’re on the magical limb here, Ben! I’m just on the limb less travelled.

As for the ‘new theoretical paradigm,’ that’s the point of developing post-intentional philosophy. Exploring! Figuring out the ways all the ancient verities actually hang together. You can cross your fingers and hope neuroscience does tradition a solid, but I wouldn’t hold your breath!

As we’ve talked about before, the reaction I keep having to BBT isn’t just that I think of the self-contradiction problem; it’s that I see a dilemma here. I think I understand your point that what looks like a guaranteed self-contradiction is actually a stubborn projection of traditional categories onto what’s supposed to be a counterintuitive theory. And yes, I agree that BBT could be discussed at a meta-level using those very categories, just as biologists can use (technically-forbidden) teleological shorthand to talk about adaptations. But biologists have Darwin’s theory of natural selection that has provided a new paradigm, and when you look at that theory you don’t find any hidden appeals to design. As Dennett says, you find natural laws being carried out by complete dummies. (Actually, he says it’s an algorithm, but I think the use of that term does make a hidden appeal to intelligent design, so it had better not be crucial to natural selection. The same goes for “function.”)

BBT’s counterpart to natural selection is a set of concepts, like heuristic, information, mechanism, and accuracy. These are some first-order terms of the theory itself, the paradigm-shifting, mind-blowing concepts that are supposed to show how one thing is really something else. Instead of being a conscious, autonomous person, a mind is really a bunch of heuristics and mechanisms that produce an illusion when it reflects back on itself.

The dilemma argument proceeds by asking for the reductive definitions of BBT’s key terms (whatever the key first-order ones are). Now notice another difference between BBT and natural selection. We could understand Darwin’s theory, even though it was revolutionary, because the theory wasn’t primarily about the human mind, and we’re not so innately limited in the way we understand other species. BBT is about our mind, so if its concepts turn out to be radically impersonal, we may have a harder time understanding the theory even if the theory is supported by experiments. The situation would then be similar to quantum mechanics.

Again, I’d like to know if you agree with the following statement: in principle, if BBT uses concepts in its explanation of the mind which have no more content to them than what’s given by what BBT regards as the illusory, intuitive view of the mind, BBT itself isn’t so radical; it doesn’t explain how the mind as intuitively understood is really something else. It seems to me obvious that that statement is true, so the only question is whether BBT’s key concepts have radically counterintuitive definitions. BBT must offer an alternative way of looking at the mind, just as natural selection isn’t the same as a personal God.

So do you define the fundamental concepts in some of your writings? My suspicion is that the definitions of those key terms are mixed. If we eliminate any trace of intuitive notions from the definitions, the terms won’t work as hard in the theory, but they do still offer tantalizing hints of a radically impersonal self-understanding. Or perhaps I’m just stuck in the matrix, so I can’t see the code by which BBT’s reduction works.

Anyway, the problem for me is that BBT’s technical terms are at least ambiguous: there are intuitive and hopefully counterintuitive definitions of them. I’d just like to confirm that those definitions are actually ambiguous.

Attributions of ‘hidden appeals’ are tricky things, but they are endemic in philosophical critique.