(Originally posted September 24, 2013… Wishing everyone a thoughtful holiday!)

The Amazing Complicating Grain

On July 4th, 1054, Chinese astronomers noticed the appearance of a ‘guest star’ in the proximity of Zeta Tauri lasting for nearly two years before becoming too faint to be detected by the naked eye. The Chaco Canyon Anasazi also witnessed the event, leaving behind this famous petroglyph:

Centuries would pass before John Bevis would rediscover it in 1731, as would Charles Messier in 1758, who initially confused it with Halley’s Comet, and decided to begin cataloguing ‘cloudy’ celestial objects–or ‘nebulae’–to help astronomers avoid his mistake. In 1844, William Parsons, the Earl of Rosse, made the following drawing of the guest star become comet become cloudy celestial object:

It was on the basis of this diagram that he gave what has since become the most studied extra-solar object in astronomical history its contemporary name: the ‘Crab Nebula.’ When he revisited the object with his 72-inch reflector telescope in 1848, however, he saw something quite different:

In 1921, John Charles Duncan was able to discern the expansion of the Crab Nebula using the revolutionary capacity of the Mount Wilson Observatory to produce images like this:

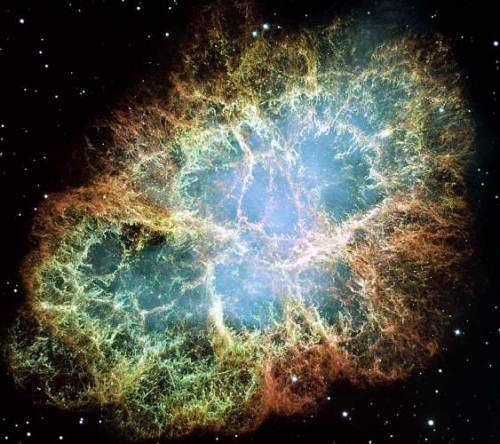

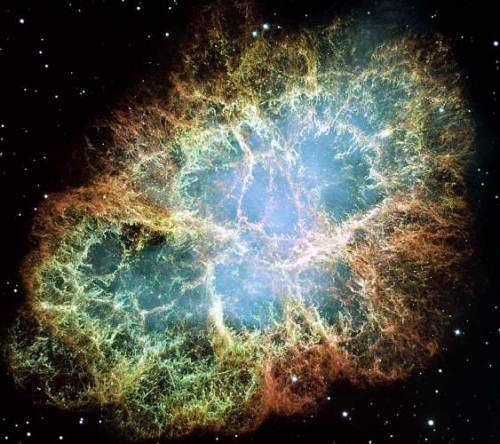

And nowadays, of course, we are regularly dazzled not only by photographs like this:

produced by Hubble, but those produced by a gallery of other observational platforms as well:

The tremendous amount of information produced has provided astronomers with an incredibly detailed understanding of supernovae and nebula formation.

What I find so interesting about this progression lies in what might be called the ‘amazing complicating grain.’ What do I mean by this? Well, there’s the myriad ways the accumulation of data feeds theory formation, of course, how scientific models tend to become progressively more accurate as the kinds and quantities of information accessed increase. But what I’m primarily interested in is what happens when you turn this structure upside down, when you look at the Chinese ‘guest star’ or Anasazi petroglyph against the baseline of what we presently know. What assumptions were made and why? How were those assumptions overthrown? Why were those assumptions almost certain to be wrong?

Why, for instance, did the Chinese assume that SN1054 was simply another star, notable only for its ‘guest-like’ transience? I’m sure a good number of people might think this is a genuinely stupid question: the imperialistic nature of our preconceptions seems to go without saying. The medieval Chinese thought SN1054 was another star rather than a supernova simply because points of light in the sky, stars, were pretty much all they knew. The old provides our only means of understanding the new. This is arguably why Messier first assumed the Crab Nebula was another comet in 1758: it was only when he obtained information distinguishing it (the lack of visible motion) from comets that he realized he was looking at something else, a cloudy celestial object.

But if you think about it, these ‘identification effects’–the ways the absence of systematic differences making systematic differences (or information) underwrites assumptions of ‘default identity’–are profoundly mysterious. Our cosmological understanding has been nothing if not a process of continual systematic differentiation or ever increasing resolution in the polydimensional sense of the natural. In a peculiar sense, our ignorance is our fundamental medium, the ‘stuff’ from which the distinctions pertaining to actual cognition are hewn.

.

The Superunknown

Another way to look at this transformation of detail and understanding is in terms of ‘unknown unknowns,’ or as I’ll refer to it here, the ‘superunknown’ (cue crashing guitars). The Hubble image and the Anasazi petroglyph not only provide drastically different quantities of information organized in drastically different ways, they anchor what might be called drastically different information ecologies. One might say that they are cognitive ‘tools,’ meaningful to the extent they organize interests and practices, which is to say, possess normative consequences. Or one might say they are ‘representations,’ meaningful insofar as they ‘correspond’ to what is the case. The perspective I want to take here, however, is natural, that of physical systems interacting with physical systems. On this perspective, information our brain cannot access makes no difference to cognition. All the information we presently possess regarding supernova and nebula formation simply was not accessible to the ancient Anasazi or Chinese. As a result, it simply could not impact their attempts to cognize SN-1054. More importantly, not only did they lack access to this information, they also lacked access to any information regarding this lack of information. Their understanding was their only understanding, hedged with portent and mystery, certainly, but sufficient for their practices nonetheless.

The bulk of SN-1054 as we know it, in other words, was superunknown to our ancestors. And, the same as the spark-plugs in your garage make no difference to the operation of your car, that information made no cognizable difference to the way they cognized the skies. The petroglyph understanding of the Anasazi, though doubtless hedged with mystery and curiosity, was for them the entirety of their understanding. It was, in a word, sufficient. Here we see the power–if it can be called such–exercised by the invisibility of ignorance. Who hasn’t read ancient myths or even contemporary religious claims and wondered how anyone could have possibly believed such ‘nonsense’? But the answer is quite simple: those lacking the information and/or capacity required to cognize that nonsense as nonsense! They left the spark-plugs in the garage.

Thus the explanatory ubiquity of ‘They didn’t know any better.’ We seem to implicitly understand, if not the tropistic or mechanistic nature of cognition, then at least the ironclad correlation between information availability and cognition. This is one of the cornerstones of what is called ‘mindreading,’ our ability to predict, explain, and manipulate our fellows. And this is how the superunknown, information that makes no cognizable difference, can be said to ‘make a difference’ after all–and a profound one at that. The car won’t run, we say, because the spark-plugs are in the garage. Likewise, medieval Chinese astronomers, we assume, believed SN-1054 was a novel star because telescopes, among other things, were in the future. In other words, making no difference makes a difference to the functioning of complex systems attuned to those differences.

This is the implicit foundational moral of Plato’s Allegory of the Cave: How can shadows come to seem real? Well, simply occlude any information telling you otherwise. Next to nothing, in other words, can strike us as everything there is, short of access to anything more–such as information pertaining to the possibility that there is something more. And this, I’m arguing, is the best way of looking at human metacognition at any given point in time, as a collection of prisoners chained inside the cave of our skull assuming they see everything there is to see for the simple want of information–that the answer lies in here somehow! On the one hand we have the question of just what neural processing gets ‘lit up’ in conscious experience (say, via information integration or EMF effects) given that an astronomical proportion of it remains ‘dark.’ What are the contingencies underwriting what accesses what for what function? How heuristically constrained are those processes? On the other hand we have the problem of metacognition, the question of the information and cognitive resources available for theoretical reflection on the so-called ‘first-person.’ And, once again, what are the contingencies underwriting what accesses what for what function? How heuristically constrained are those processes?

The longer one mulls these questions, the more the concepts of traditional philosophy of mind come to resemble Anasazi petroglyphs–which is to say, an enterprise requiring the superunknown. Placed on this continuum of availability, the assumption that introspection, despite all the constraints it faces, gets enough of the information it needs to at least roughly cognize mind and consciousness as they are becomes, at best, a claim crying out for justification, and at worst, wildly implausible. To say philosophy lacks the information and/or cognitive resources it requires to resolve its debates is a platitude, one so worn as not to chafe any contemplative skin whatsoever. No enemy is safer or more convenient than an old enemy, and skepticism is as ancient as philosophy itself. But to say that science is showing that metacognition lacks the information and/or cognitive resources philosophy requires to resolve its debates is to say something quite a bit more prickly.

Cognitive science is revealing the superunknown of the soul as surely as astronomy and physics are revealing the superunknown of the sky. Whether we move inward or outward the process is pretty much the same, as we should suspect, given that the soul is simply more nature. The tie-dye knot of conscious experience has been drawn from the pot and is slowly being unravelled, and we’re only now discovering the fragmentary, arbitrary, even ornamental nature of what we once counted as our most powerful and obvious ‘intuitions.’

This is how the Blind Brain Theory treats the puzzles of the first-person: as artifacts of illusion and neglect. The informatic and heuristic resources available for cognition at any given moment constrain what can be cognized. We attribute subjectivity to ourselves as well as to others, not because we actually have subjectivity, but because it’s the best we can manage given the fragmentary information we’ve got. Just as the medieval Chinese and Anasazi were prisoners of their technical limitations, you and I are captives of our metacognitive neural limitations.

As straightforward as this might sound, however, it turns out to be far more difficult to conceptualize in the first-person than in astronomy. Where the incorporation of the astronomical superunknown into our understanding of SN-1054 seems relatively intuitive, the incorporation of the neural superunknown into our understanding of ourselves threatens to confound intelligibility altogether. So why the difference?

The answer lies in the relation between the information and the cognitive resources we have available. In the case of SN-1054, the information provided happens to be the very information that our cognitive systems have evolved to decipher, namely, environmental information. The information provided by Hubble, for instance, is continuous with the information our brain generally uses to mechanically navigate and exploit our environments: more of the same. In the case of the first-person, however, the information accessed in metacognition falls drastically short of what our cognitive systems require to conceive us in an environmentally continuous manner. And indeed, given the constraints pertaining to metacognition, the inefficiencies pertaining to evolutionary youth, the sheer complexity of its object, not to mention its structural complicity with its object, this is precisely what we should expect: selective blindness to whole dimensions of information.

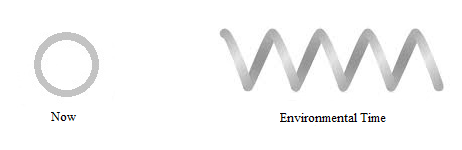

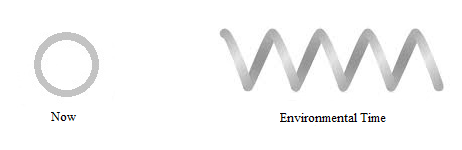

So one might visualize the difference between the Anasazi and our contemporary astronomical understanding of SN-1054 as progressive turns of a screw:

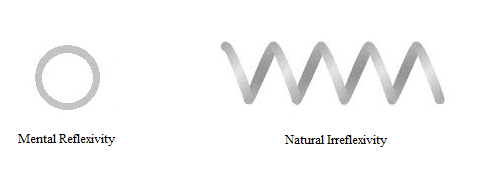

where the contemporary understanding can be seen as adding more and more information, ‘twists,’ to the same set of dimensions. The difference between our intuitive and our contemporary neuroscientific understanding of ourselves, on the other hand, is more like:

where our screw is viewed on end instead of from the side, occluding the dimensions constitutive of the screw. The ‘O’ is actually a screw, obviously so, but for the simple want of information appears to be something radically different, something self-continuous and curiously flat, completely lacking empirical depth. Since these dimensions remain superunknown, they quite simply make no metacognitive difference. In the same way additional environmental information generally always complicates prior experiential and cognitive unities, the absence of information can be seen as simplifying experiential unities. Paint can become swarming ants, and swarming ants can look like paint. The primary difference with the first-person, once again, is that the experiential simplification you experience, say, watching a movie scene fade to white is ‘bent’ across entire dimensions of missing information—as is the case with our ‘O’ and our screw. The empirical depth of the latter is folded into the flat continuity of the former. On this line of interpretation, the first-person is best understood as a metacognitive version of the ‘flicker fusion’ effect in psycho-physics, or the way sleep can consign an entire plane flight to oblivion. You might say that neglect is the sleep of identity.

As the only game in information town, the ‘O’ intuitively strikes us as essentially what we are, rather than a perspectival artifact of information scarcity and heuristic inapplicability. And since this ‘O’ seems to frame the possibility of the screw, things are apt to become more confusing still, with proponents of ‘O’-ism claiming the ontological priority of an impoverished cognitive perspective over ‘screwism’ and its embarrassment of informatic riches, and with proponents of screwism claiming the reverse, but lacking any means to forcefully extend and demonstrate their counterintuitive positions.

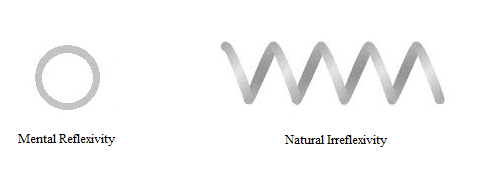

One can analogically visualize this competition of framing intuitions as the difference between,

where the natural screw takes the ‘O’ as its condition, and,

where the ‘O’ takes the natural screw as its condition, with the caveat that one understands the margins of the ‘O’ asymptotically, which is to say, as superunknown. A better visual analogy lies in the margins of your present visual field, which is somehow bounded without possessing any visible boundary. Since the limits of conscious cognition always outrun the possibility of conscious cognition, conscious cognition, or ‘thought,’ seems to hang ‘nowhere,’ or at the very least ‘beyond’ the empirical, rendering the notion of ‘transcendental constraint’ an easy-to-intuit metacognitive move.

In this way, one might diagnose the constitutive transcendental as a metacognitive artifact of neglect. A symptom of brain blindness.

This is essentially the ambit of the Blind Brain Theory: to explain the incompatibility of the intentional with the natural in terms of what information we should expect to be available to metacognition. Insofar as the whole of traditional philosophy turns on ‘reflection,’ BBT amounts to a wholesale reconceptualization of the philosophical tradition as well. It is, without any doubt, the most radical parade of possibilities to ever trammel my imagination—a truly post-intentional philosophy—and I feel as though I have just begun to chart the troubling extent of its implicature. The motive of this piece is to simply convey the gestalt of this undiscovered country with enough sideways clarity to convince a few daring souls to drag out their theoretical canoes.

To summarize then: Taking the mechanistic paradigm of the life sciences as our baseline for ontological accuracy (and what else would we take?), the mental can be reinterpreted in terms of various kinds of dimensional loss. What follows is a list of some of these peculiarities and a provisional sketch of their corresponding ‘blind brain’ explanation. I view each of these theoretical vignettes as nothing more than an inaugural attempt, pixillated petroglyphs that are bound to be complicated and refined should the above hunches find empirical confirmation. If you find yourself reading with a squint, I ask only that you ponder the extraordinary fact that all these puzzling phenomena are characterized by missing information. Given the relation between information availability and cognitive reliability, is it simply a coincidence that we find them so difficult to understand? I’ll attempt to provide ways to visualize these sketches to facilitate understanding where I can, keeping in mind the way diagrams both elide and add dimensions.

.

Concept/Intuition – Kind of Informatic Loss/Incapacity

Nowness – Insufficient temporal information regarding the time of information processing is integrated into conscious awareness. Metacognition, therefore, cannot make second-order before-and-after distinctions (or, put differently, is ‘laterally insensitive’ to the ‘time of timing’), leading to the faulty assumption of second-order temporal identity, and hence the ‘paradox of the now’ so famously described by Aristotle and Augustine.

So again, metacognitive neglect means our brains simply cannot track the time of their own operations the way they can track the time of the environment that systematically engages them. Since the absence of information is the absence of distinctions, our experience of time as metacognized ‘fuses’ into the paradoxical temporal identity in difference we term the now.

Reflexivity – Insufficient temporal information regarding the time of information processing is integrated into conscious awareness. Metacognition, therefore, can only make granular second-order sequential distinctions, leading to the faulty metacognitive assumption of mental reflexivity, or contemporaneous self-relatedness (either intentional as in the analytic tradition, or nonintentional as well, as posited in the continental tradition), the sense that cognition can be cognized as it cognizes, rather than always only post facto. Thus, once again, the mysterious (even miraculous) appearance of the mental, since mechanically, all the processes involved in the generation of consciousness are irreflexive. Resources engaged in tracking cannot themselves be tracked. In nature the loop can be tightened, but never cinched the way it appears to be in experience.

Once again, experience as metacognized fuses, consigning vast amounts of information to the superunknown, in this case, the dimension of irreflexivity. The mental is not only flattened into a mere informatic shadow, it becomes bizarrely self-continuous as well.

Personal Identity – Insufficient information regarding the sequential or irreflexive processing of information integrated into conscious awareness, as per above. Metacognition attributes psychological continuity, even ontological simplicity, to ‘us’ simply because it neglects the information required to cognize myriad, and in many cases profound, discontinuities. The same way sleep elides travel, making it seem like you simply ‘awaken someplace else,’ so too does metacognitive neglect occlude any possible consciousness of moment to moment discontinuity.

Conscious Unity – Insufficient information regarding the disparate neural complexities responsible for consciousness. Metacognition, therefore, cannot make the relevant distinctions, and so assumes unity. Once again, the mundane assertion of identity in the absence of distinctions is the culprit. So the character below strikes us as continuous,

X

even though it is actually composite,

simply for want of discriminations, or additional information.

Meaning Holism – Insufficient information regarding the disparate neural complexities responsible for conscious meaning. Metacognition, therefore, cannot make the high-dimensional distinctions required to track external relations, and so mistakes the mechanical systematicity of the pertinent neural structures and functions (such as the neural interconnectivity requisite for ‘winner take all’ systems) for a lower dimensional ‘internal relationality.’ ‘Meaning,’ therefore, appears to be differential in some elusive formal, as opposed to merely mechanical, sense.

Volition – Insufficient information regarding neural/environmental production and attenuation of behaviour integrated into conscious awareness. Unable to track the neurofunctional provenance of behaviour, metacognition posits ‘choice,’ the determination of behaviour ex-nihilo.

Once again, the lack of access to a given dimension of information forces metacognition to rely on an ad hoc heuristic, ‘choice,’ which only becomes a problem when theoretical metacognition, blind to its heuristic occlusion of dimensionality, feeds it to cognitive systems primarily adapted to high-dimensional environmental information.

Purposiveness – Insufficient information regarding neural/environmental production and attenuation of behaviour integrated into conscious awareness. Cognition thus resorts to noncausal heuristics keyed to solving behaviours rather than those keyed to solving environmental regularities—or ‘mindreading.’ Blind to the heuristic nature of these systems, theoretical metacognition attributes efficacy to predicted outcomes. Constraint is intuited in terms of the predicted effect of a given behaviour as opposed to its causal matrix. What comes after appears to determine what comes before, or ‘cranes,’ to borrow Dennett’s metaphor, become ‘skyhooks.’ Situationally adapted behaviours become ‘goal-directed actions.’

Value – Insufficient information regarding neural/environmental production and attenuation of behaviour integrated into conscious awareness. Blind to the behavioural feedback dynamics that effect avoidance or engagement, metacognition resorts to heuristic attributions of ‘value’ to effect further avoidance or engagement (either socially or individually). Blind to the radically heuristic nature of these attributions, theoretical metacognition attributes environmental reality to these attributions.

Normativity – Insufficient information regarding neural/environmental production and attenuation of behaviour integrated into conscious awareness. Cognition thus resorts to noncausal heuristics geared to solving behaviours rather than those geared to solving environmental regularities. Blind to these heuristic systems, deliberative metacognition attributes efficacy or constraint to predicted outcomes. Constraint is intuited in terms of the predicted effect of a given behaviour as opposed to its causal matrix. What comes after appears to determine what comes before. Situationally adapted behaviours become ‘goal-directed actions.’ Blind to the dynamics of those behavioural patterns producing environmental effects that effect their extinction or reproduction (that generate attractors), metacognition resorts to drastically heuristic attributions of ‘rightness’ and ‘wrongness,’ further effecting the extinction or reproduction of behavioural patterns (either socially or individually). Blind to the heuristic nature of these attributions, theoretical metacognition attributes environmental reality to them. Behavioural patterns become ‘rules,’ apparent noncausal constraints.

Aboutness (or Intentionality Proper) – Insufficient information regarding processing of environmental information integrated into conscious awareness. Even though we are mechanically embedded as a component of our environments, outside of certain brute interactions, information regarding this systematic causal interrelation is unavailable for cognition. Forced to cognize/communicate this relation absent this causal information, metacognition resorts to ‘aboutness’. Blind to the radically heuristic nature of aboutness, theoretical metacognition attributes environmental reality to the relation, even though it obviously neglects the convoluted circuit of causal feedback that actually characterizes the neural-environmental relation.

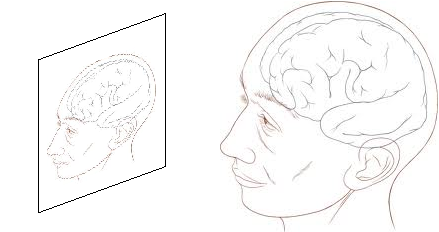

The easiest way to visualize this dynamic is to evince it as,

where the screw diagrams the dimensional complexity of the natural within an apparent frame that collapses many of those dimensions—your present ‘first person’ experience of seeing the figure above. This allows us to complicate the diagram thus,

bearing in mind that the explicit limit of the ‘O’ diagramming your first-person experiential frame is actually implicit or asymptotic, which is to say, occluded from conscious experience as it was in the initial diagram. Since the actual relation between ‘you’ (or your ‘thought,’ or your ‘utterance,’ or your ‘belief,’ and ‘etc.’) and what is cognized/perceived—experienced—outruns experience, you find yourself stranded with the bald fact of a relation, an ineluctable coincidence of you and your object, or ‘aboutness,’

where ‘you’ simply are related to an independent object world. The collapse of the causal dimension of your environmental relatedness into the superunknown requires a variety of ‘heuristic fixes’ to adequately metacognize. This then provides the basis for the typically mysterious metacognitive intuitions that inform intentional concepts such as representation, reference, content, truth, and the like.

Representation – Insufficient information regarding processing of environmental information integrated into conscious awareness. Even though we are mechanically embedded as a component of our environments, outside of certain brute interactions, information regarding this systematic causal interrelation is unavailable for cognition. Forced to cognize/communicate this relation absent this causal information, metacognition resorts to ‘aboutness’. Blind to the radically heuristic nature of aboutness, theoretical metacognition attributes environmental reality to the relation, even though it obviously neglects the convoluted circuit of causal feedback that actually characterizes the neural-environmental relation. Subsequent theoretical analysis of cognition, therefore, attributes aboutness to the various components apparently identified, producing the metacognitive illusion of representation.

Our implicit conscious experience of some natural phenomenon,

becomes explicit,

replacing the simple unmediated (or ‘transparent’) intentionality intuited in the former with a more complex mediated intentionality that is more easily shoehorned into our natural understanding of cognition, given that the latter deals in complex mechanistic mediations of information.

Truth – Insufficient information regarding processing of environmental information integrated into conscious awareness. Even though we are mechanically embedded as a component of our environments, outside of certain brute interactions, information regarding this systematic causal interrelation is unavailable for cognition. Forced to cognize/communicate this relation absent this causal information, metacognition resorts to ‘aboutness’. Since the mechanical effectiveness of any specific conscious experience is a product of the very system occluded from metacognition, it is intuited as given in the absence of exceptions—which is to say, as ‘true.’ Truth is the radically heuristic way the brain metacognizes the effectiveness of its cognitive functions. Insofar as possible exceptions remain superunknown, the effectiveness of any relation metacognized as ‘true’ will remain apparently exceptionless, what obtains no matter how we find ourselves environmentally embedded—as a ‘view from nowhere.’ Thus your ongoing first-person experience of,

will be implicitly assumed true period, or exceptionless, (sufficient for effective problem solving in all ecologies), barring any quirk of information availability (‘perspective’) that flags potential problem solving limitations, such as a diagnosis of psychosis, awakening from a nap, the use of a microscope, etc. This allows us to conceive the natural basis for the antithesis between truth and context: as a heuristic artifact of neglect, truth literally requires the occlusion of information pertaining to cognitive function to be metacognitively intuited.

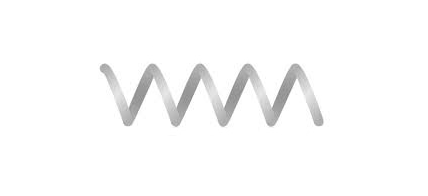

So in terms of our visual analogy, truth can be seen as the cognitive aspect of ‘O,’ how the screw of nature appears with most of its dimensions collapsed, as apparently ‘timeless and immutable,’

for simple want of information pertaining to its concrete contingencies. As more and more of the screw’s dimensions are revealed, however, the more temporal and mutable—contingent—it becomes. Truth evaporates… or so we intuit.

Lacuna Obligata

Given the facility with which Blind Brain Theory allows these concepts to be naturally reconceptualised, my hunch is that many others may be likewise demystified. Aprioricity, for instance, clearly turns on some kind of metacognitive ‘priority neglect,’ whereas abstraction clearly involves some kind of ‘grain neglect.’ It’s important to note that these diagnoses do not impeach the implicit effectiveness of many of these concepts so much as what theoretical metacognition, or ‘philosophical reflection,’ has generally made of them. It is precisely the neglect of information that allows our naive employment of these heuristics to be effective within the limited sphere of those problem ecologies they are adapted to solve. This is actually what I think the later Wittgenstein was after in his attempts to argue the matching of conceptual grammars with language-games: he simply lacked the conceptual resources to see that normativity and aboutness were of a piece. It is only when philosophers, as reliant upon deliberative theoretical metacognition as they are, misconstrue what are parochial problem solvers for more universal ones, that we find ourselves in the intractable morass that is traditional philosophy.

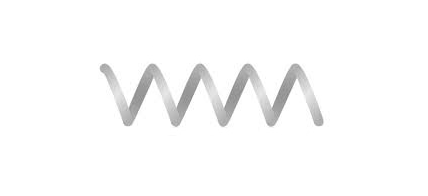

To understand the difference between the natural and the first-person we need a positive way to characterize that difference. We have to find a way to let that difference make a difference. Neglect is that way. The trick lies in conceiving the way the neglect of various dimensions of information dupes theoretical metacognition into intuiting the various structural peculiarities traditionally ascribed to the first-person. So once again, where running the clock of astronomical discovery backward merely subtracts information from a fixed dimensional frame,

explaining the first-person requires the subtraction of dimensions as well,

that we engage in a kind of ‘conceptual origami,’ conceive the first-person, in spite of its intuitive immediacy, as what the brain looks like when whole dimensions of information are folded away.

And this, I realize, is not easy. Nevertheless, tracking environments requires resources which themselves cannot be tracked, thus occluding cognitive neurofunctionality from cognition—imposing ‘medial neglect.’ The brain thus becomes superunknown relative to itself. Its own astronomical complexity is the barricade that strands metacognitive intuitions with intentionality as traditionally conceived. Anyone disagreeing with this needs to explain how it is human metacognition overcomes this boggling complexity. Otherwise, all the provocative questions raised here remain: Is it simply a coincidence that intentional concepts exhibit such similar patterns of information privation? For instance, is it a coincidence that the curious causal bottomlessness that haunts normativity—the notion of ‘rules’ and ‘ends’ somehow ‘constraining’ via some kind of obscure relation to causal mechanism—also haunts the ‘aiming’ that informs conceptions of representation? Or volition? Or truth?

The Blind Brain Theory says, Nope. If the lack of information, the ‘superunknown,’ is what limits our ability to cognize nature, then it makes sense to assume that it also limits our ability to cognize ourselves. If the lack of information is what prevents us from seeing our way past traditional conceits regarding the world, it makes sense to think it also prevents us from seeing our way past cherished traditional conceits regarding ourselves. If information privation plays any role in ignorance or misconception at all, we should assume that the grandiose edifice of traditional human self-understanding is about to founder in the ongoing informatic Flood…

That what we have hitherto called ‘human’ has been raised upon shades of cognition obscura.
